Cubase's audio warp facilities provide a powerful toolkit for manipulating the timing of recorded audio to fit grids and grooves.

Back in September, I looked at applications of the off-line Time Stretch function. Under the broad heading of 'audio warp', Cubase also features some non-destructive tempo and pitch-shifting tools. These provide a number of creative and corrective possibilities, but the emphasis of this sort of processing is mainly on loops, so I'll concentrate upon loop-based applications in this column.

The audio warp capabilities of Cubase that we're about to explore are undoubtedly very powerful, but I'd offer a word of caution: much as I like Cubase's audio warp functionality, if I'm working with projects that are dominated by commercial sample library loops and require simple tempo-matching, I'll usually go instead for a tool designed specifically for that job (in my case, Sony's Acid Pro). However, when it's just a few loops that require processing, or I want to create loops from performances I've recorded myself, or if something more than basic tempo-matching is needed, audio warp is definitely up to the task.

Coming To Terms With The Terms

Before getting to grips with practicalities, three terms need to be understood: 'hitpoints', 'warp tabs' and 'Q-points'. In essence, these can all be thought of as 'markers' that are positioned within an audio clip, and control how Cubase then manipulates the timing of the audio in the clip.

Audio warp is useful for correcting timing drifts in live performances, as shown here with an acoustic guitar part. Warp tabs (in orange) have been used to drag the chord to the starts of bars 18-21 so that the whole performance will play in time with the project tempo. The chord at the start of bar 22, which is a little late compared with the tempo-based grid, has yet to be processed.

Warp tabs are usually placed at particularly important musical time positions. For example, they might be placed at the first beat of every bar in an audio event. Once the warp tabs are in place, the playback of the audio event can then, for example, be stretched to force any tempo variation in the performance to match the exact tempo of the project (I'm not suggesting metronomic music is always a good thing, just that this can be done if required).
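One way to picture what warp tabs do is as a piecewise-linear time map: each tab pins a position in the recording to a position on the project grid, and the audio between neighbouring tabs is stretched or squeezed evenly. The Python sketch below illustrates that idea only. It is not a description of Cubase's internal algorithm, and the function name and example timings are invented for illustration.

```python
from bisect import bisect_right

def warp_time(source_time, tabs):
    """Map a time in the recording (seconds) to its warped position.

    tabs is a list of (source_seconds, target_seconds) pairs sorted by
    source time: where a chord was actually played, and where the grid
    says it should land. Audio between two tabs is stretched linearly.
    """
    sources = [s for s, _ in tabs]
    # Find the pair of tabs surrounding source_time (clamped to the ends).
    i = bisect_right(sources, source_time) - 1
    i = max(0, min(i, len(tabs) - 2))
    (s0, t0), (s1, t1) = tabs[i], tabs[i + 1]
    ratio = (t1 - t0) / (s1 - s0)  # >1 stretches, <1 compresses
    return t0 + (source_time - s0) * ratio

# Hypothetical guitar take at 120 bpm in 4/4 (one bar = 2.0 s): the
# player drifts late, so each bar's downbeat is pulled back onto the grid.
tabs = [(0.0, 0.0), (2.1, 2.0), (4.3, 4.0), (6.4, 6.0)]
print(warp_time(2.1, tabs))  # a tabbed downbeat lands on the grid
print(warp_time(3.2, tabs))  # a point mid-bar is moved proportionally
```

In this made-up example the second bar lasts 2.2 seconds in the recording, so everything inside it is compressed by a factor of 2.0/2.2 to fit the two-second bar of the project.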

In contrast, hitpoints are usually placed at attack transients in an audio file — the most obvious example would be hitpoints at the start of each drum hit in a drum loop. If both warp tabs and hitpoints were placed in the same audio performance, some of them may well coincide (for example, at the attack of a well-timed kick drum on the first beat of the bar) but others might not, particularly if the musical performance plays off the beat. It is in these circumstances that the distinction between warp tabs and hitpoints becomes clear: the former are used to mark the positions of musically important intervals such as bars and beats within a performance, while the latter pick out every rhythmic event within the audio, and thus may be positioned off the beat as well as on it.

Less commonly used are Q-points. These can be thought of as extra 'markers' between two hitpoints and they're useful if a particular hit has either a slow attack or some sort of secondary peak. In the case of the slow attack, the hitpoint might be located at the start of the sound, but the rhythmically important point is where the sound peaks, as this is the section of the sound that needs to be locked into any quantising or time-stretching that is being done. Placing a Q-point at the peak gives the audio warp process more information to go on, so that the time-shifting is achieved in a more musically appropriate fashion.

To The Point

The main application of warp tabs is to get an audio file with a varying tempo to play in time with a fixed project tempo. Useful though that can be in some circumstances (for example, when you have a live take that requires a little more consistency in terms of tempo, so that it can be used as a basis for overdubbing), perhaps the most fun is to be had with hitpoints, so let's concentrate on what these can do for us. The Cubase Operations Manual describes the basic steps involved in creating and editing hitpoints, so only a brief recap is required here. I'll then move on to focus on some uses for these tools.

Hitpoints (in blue) added to a drum loop. Light blue indicates a hitpoint added automatically, based upon the Hitpoint Sensitivity slider setting, while dark blue hitpoints are those added manually.

The toolbar for the Sample Editor contains the key buttons. In the strip at the left-hand end is the Hitpoint Edit button (selected and displayed in blue). Immediately to its right are the Audio Tempo Definition tool and the Warp Samples buttons. Further to the right are the Hitpoint Mode button (also in blue) and the Hitpoint Sensitivity slider. The button with the musical note icon (also in blue) is the Musical Mode button, while the Warp Setting drop-down menu allows the type of material to be specified.

Creating hitpoints is a simple, four-step process. First, the loop is opened in the Sample Editor by double-clicking on the event in the Project window. Second, the Audio Tempo Definition tool is used to specify the length and time signature of the loop, and from this the original tempo is calculated automatically. Third, the Hitpoint Mode button is selected and a series of hitpoints is then calculated automatically. Finally, the number of hitpoints can be adjusted via the Sensitivity slider, and hitpoints can be manually adjusted via the mouse; what you are aiming for here is to tidy up the hitpoint positions, so that each prominent note or drum hit attack has its own hitpoint positioned at its start.
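The tempo calculation in the second step is simple arithmetic: count the beats in the loop and divide by its duration in minutes. A minimal Python sketch follows, assuming a time signature with a quarter-note beat; the function name and figures are illustrative and not taken from Cubase.

```python
def loop_tempo(length_seconds, bars, beats_per_bar=4):
    """Return the tempo in bpm of a loop of known length.

    Assumes the time signature's denominator is 4, so one beat is a
    quarter note (for example 4/4 or 3/4).
    """
    total_beats = bars * beats_per_bar
    return total_beats * 60.0 / length_seconds

# A two-bar loop in 4/4 lasting 4.0 seconds contains 8 beats,
# so its original tempo is 8 * 60 / 4 = 120 bpm.
print(loop_tempo(4.0, bars=2))  # 120.0
```

This is also why trimming the loop to an exact number of bars matters: if the length is slightly out, the calculated tempo will be out by the same proportion.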

Depending on what you want to use the hitpoints for (see below), some further manual editing might be required. With Hitpoint Mode selected in the Sample Editor, individual hitpoints can be dragged by placing the mouse on their handles (the small triangles that appear at the top of each hitpoint). If required, hitpoints can be deleted by holding the Alt key while hovering over the handle. Holding down the Alt key anywhere else turns the cursor into a pencil tool and allows new hitpoints to be added.

While this sounds like quite a bit of work, in practice (and with practice!) it usually takes just a few seconds, and the loop is then ready for some corrective or creative manipulation. If all you want the loop to do is follow the project tempo, then clicking the Musical Mode button in the Sample Editor will do the trick: the loop will then play in sync with the project and any tempo changes within it.
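For basic tempo-matching, the amount of stretching required follows directly from the ratio of the loop's original tempo to the project tempo. The Python sketch below shows only that arithmetic, with hypothetical function names; how Cubase actually performs the stretch in real time is not covered here.

```python
def stretch_factor(original_bpm, project_bpm):
    """Factor by which a loop's duration must change to match the project.

    Values above 1 lengthen the loop (the project is slower than the
    loop); values below 1 shorten it.
    """
    return original_bpm / project_bpm

def stretched_length(length_seconds, original_bpm, project_bpm):
    """Duration of the loop after tempo-matching, in seconds."""
    return length_seconds * stretch_factor(original_bpm, project_bpm)

# A 4.0-second loop recorded at 120 bpm, played in a 100 bpm project,
# must last 4.0 * 120 / 100 = 4.8 seconds to fill the same two bars.
print(stretched_length(4.0, 120, 100))
```

The further the project tempo is from the loop's original tempo, the further this factor moves from 1, and the harder the stretching algorithm has to work.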

Here's one further brief trick, if creating loops from your own recordings. Prior to creating the hitpoints, first make the usual adjustments to the event in the Project window or Sample Editor to isolate just the section you want to loop. Then, with the event selected in the Project window, choose 'Audio / Bounce Selection', and when prompted replace the original Event with the bounced copy. In my experience, this makes it easier to use the Audio Tempo Definition tool to define the details needed to apply Musical Mode for basic tempo-matching.

Groovy Baby!

Tempo-matching aside, one of the most useful creative options provided by audio warp is the ability to groove-quantise audio — that is, to take the groove from one audio performance (for example, a drum loop) and apply it to another (for example, a bass or guitar loop). This can be musically very satisfying, as it is often the slight variations in the groove (playing slightly ahead or slightly behind the strict beat defined by the tempo) that can give a performance its distinct feel. The technique can be particularly useful if you are combining commercial library loops from different sources; a little judicious use of groove quantise can help match the rhythmic timing of the playing more tightly.

Once a groove has been extracted, it appears as a preset in the Quantize Type drop-down menu.

The bass loop in track 2 has been groove-quantised, based upon a groove that was extracted from the drum loop in track 1. Comparing the quantised bass with the original (track 3) immediately shows how some of the bass notes have been repositioned to provide a tighter match to the drum hits.

Three versions of the same drum loop: the original; beat-sliced as an audio part; and beat-sliced and dissolved into individual audio events.

Unsurprisingly, the most common starting point for groove quantising is a drum loop, although there is no reason why another instrument cannot be used as the basis for defining the groove. It is worth spending a little extra time on hitpoint placement, by auditioning each section of the loop to ensure the hitpoint is positioned correctly on the attack of each drum hit. Once this is done, the 'Audio / Hitpoints / Create Groove Quantize From Hitpoints' option can be selected. This extracts the groove and adds it to the list of groove presets available from the drop-down Quantize Type menu in the Project window.

The groove can then be applied to any other audio performance in the project. Selecting a suitable audio event and then choosing 'Audio / Realtime Processing / Quantize Audio' will apply whatever quantise groove is currently selected in the Quantize Type drop-down menu. When you first start experimenting with this process, it can be handy to make a duplicate copy of the audio track prior to applying the groove. If you then apply Bounce Selection (as described above) to the audio event on the duplicate track, pan it hard right, and then apply the groove quantise to the original event and pan its track hard left, it becomes easy to hear exactly what the groove quantise is doing and identify how well the process has worked.
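Conceptually, extracting a groove means measuring how far each hitpoint sits from the strict grid, and applying it means moving the notes of another part to the grid plus those same offsets. The Python sketch below models that idea in a deliberately simplified way. It is an assumption about the general principle, not Cubase's implementation, and the function names, grid resolution and timings are all hypothetical.

```python
def extract_groove(hit_beats, grid=0.25):
    """Return each hitpoint's offset (in beats) from its nearest grid line.

    hit_beats are hitpoint positions measured in beats from the loop
    start; grid is the spacing in beats (0.25 = sixteenth notes in 4/4).
    The result maps a grid index to the timing offset found there.
    """
    groove = {}
    for pos in hit_beats:
        index = round(pos / grid)
        groove[index] = pos - index * grid
    return groove

def apply_groove(note_beats, groove, grid=0.25):
    """Move each note to its nearest grid line plus the groove's offset.

    Grid lines with no hit in the source loop carry no offset, so notes
    that fall there are simply quantised straight.
    """
    result = []
    for pos in note_beats:
        index = round(pos / grid)
        result.append(index * grid + groove.get(index, 0.0))
    return result

# Hypothetical drum loop: beat 2 is played slightly late (1.03) and the
# hit before beat 4 slightly early (2.48).
drum_hits = [0.0, 0.5, 1.03, 1.5, 2.0, 2.48, 3.0]
groove = extract_groove(drum_hits)

# Hypothetical bass part with looser timing, pulled onto the drum groove.
bass_notes = [0.02, 0.97, 2.0, 2.55, 3.01]
print(apply_groove(bass_notes, groove))
```

In this example the bass note played at 0.97 beats ends up at roughly 1.03, matching the late drum hit rather than the strict grid, which is the point of groove quantising as opposed to ordinary quantising.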

The Slice Is Right

The other obvious use of audio warp is for ReCycle-style beat-slicing: in essence, each hitpoint is used to define a slice in the loop, and individual slices can then be manipulated. Once the loop has been cut into its individual slices, the options include being able to create variations of the loop's performance, making entirely new loops based on sounds within individual slices, replacing sounds in a loop and processing individual slices. Again, drum loops are prime candidates for the beat-slicing process.

Having created and edited the hitpoints in your loop, the only other detail to check before beat-slicing is that Musical Mode is not engaged in the Sample Editor when you return to the Project window. With the audio event selected, the 'Audio / Hitpoints / Create Slices From Hitpoints' menu option will create the slices and tempo-match the loop to the project. The original audio event is replaced in the Project window by a new audio part (an audio part contains a series of linked audio events) in which the slices are held as individual audio events.
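The tempo-matching that comes with slicing can be thought of as repositioning rather than stretching: each slice keeps its musical position in beats, so its start time in seconds simply follows the project tempo. The Python sketch below illustrates that principle under the assumption that slice positions are stored in beats; the function name and values are made up for the example.

```python
def slice_start_times(hit_beats, project_bpm):
    """Return the start time in seconds of each slice at the project tempo.

    hit_beats are the hitpoint positions in beats from the loop start.
    Each slice keeps its musical position, so only the spacing between
    slices changes with tempo; the audio inside a slice is untouched.
    """
    seconds_per_beat = 60.0 / project_bpm
    return [beat * seconds_per_beat for beat in hit_beats]

# Hypothetical one-bar loop with a hit on every quarter-note beat.
hits = [0.0, 1.0, 2.0, 3.0]
print(slice_start_times(hits, 120))  # [0.0, 0.5, 1.0, 1.5]
print(slice_start_times(hits, 100))  # same slices, spaced about 0.6 s apart
```

One consequence of this model is that slowing the project down well below the loop's original tempo spreads the slices apart, which can leave audible gaps between them.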

While the sliced loop provides tempo-matching, the key reason for slicing is the further editing options it opens up. Double-clicking on the audio part in the Project window will open it in the Audio Part Editor and this provides plenty of editing options. Alternatively, you can select the 'Audio / Dissolve Part' menu option, which replaces the audio part with individual audio events, each of which represents one slice, and editing can then be done in the Project window. Before doing any editing, it is a good idea to make a copy of the audio part. If you then make any changes to this copy, Cubase will prompt you to create a 'New Version', and if you do so the original will be left intact.

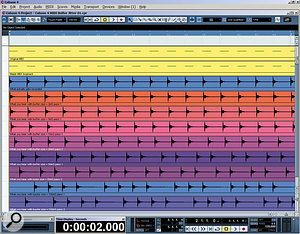

In this example, a beat-sliced drum loop has been dissolved and edited on three tracks to create a variation on the original. The kick-drum slices have been moved to track 2 for separate EQ and compression, while on the third track a kick extracted from a different loop has been used to emphasise beat 1, the snare slice has been reversed (and gain reduced) ahead of beat 2 and an additional kick-drum hit has been added after beat 4.

A few brief examples illustrate the possibilities of working with the beat-sliced loop. For example, as shown in the screenshot, a drum loop is being edited in the Project window, having been dissolved into its individual audio events. The kick-drum slices have been moved to a fresh track (making sure that their position is maintained by selecting the Grid Relative setting for snapping). Separate processing can then be applied to the kick, perhaps changing its level, adjusting its EQ or applying some compression. Similar things could obviously be done with other elements from the loop.

A second possibility is the replacement of individual hits within a loop. You sometimes find that a loop sounds right musically, but has a weak snare or kick. Being able to isolate the individual sounds through beat-slicing allows them to be replaced. This might involve taking a snare or kick sound from one loop and using it to replace (or perhaps layer with) the same drum sound in another loop. Again, arranging the loops to be used in a series of tracks in the Project window makes the whole process easy to perform.

Finally, variations on the original loop can be created by processing, moving, muting or adding individual hits. For example, extra kick-drum hits might be added to make the loop a little busier. These can easily be added on another track, and once you are happy with the loop variation the two tracks can be bounced down (via the Export / Audio Mixdown option) to create a single audio event (the latter will be easier to handle if you need to copy and paste it to build a complete drum track).

And Finally...

These brief examples really only scratch the surface of what the Cubase audio warp features are capable of doing. However, if you do work with loops as part of your music-making process, Cubase's audio warp functionality is most certainly worth taking the time to explore. While simple tempo-matching is, in itself, extremely useful, the creative possibilities go way beyond that, and with groove quantise and beat-slicing Cubase can help you get considerable extra mileage from any loop-library material that you might own.