Welcome to No Limit Sound Productions. Where there are no limits! Enjoy your visit!

| Company Founded | 2005 |
|---|---|
| Overview | Our services include Sound Engineering, Audio Post-Production, System Upgrades and Equipment Consulting. |
| Mission | Our mission is to provide excellent quality and service to our customers. We do customized service. |

Saturday, June 29, 2019

Friday, June 28, 2019

Q. How do audio and video stay in sync?

By Hugh Robjohns

I'm having some problems understanding exactly how audio-to-video synchronisation works. I know that the clock generates a pulse — it gives off a voltage at a certain frequency. But how does this keep the sound and the pictures in time?

SOS Forum post

Technical Editor Hugh Robjohns replies: The pulses generated by the clock generator are just that — pulses — but at a very precisely controlled rate. These are intended only to control the rate at which pictures or audio samples are taken, or the speed at which an analogue tape is dragged past the recording heads.

The missing link that provides the positional information is the timecode generator, and all this does is count the pulses from some arbitrarily agreed starting point, and keep track of that count as timecode within the recording medium somewhere.

When replaying, the replay clock generator provides more pulses to determine the replay speed of the medium, and the timecode numbers identify which bit of sound is supposed to align to which pictures.

Let's consider the example of pictures on a video tape, and sound on a separate digital recorder of some sort, imagining that you want to replay both together in perfect sync.

The 'rules' for this kind of material are that there must be 25 picture frames every second (sticking with European standards here for simplicity; the US ones are different) and 1920 audio samples for every picture frame (the standard 48kHz sample rate gives 48,000 samples per second, divided by 25 frames), and that the first of each batch of samples must align precisely with the start of each picture frame. This last point is to facilitate editing, so that when cutting on a picture boundary you don't end up cutting halfway through an audio sample!

To maintain these rules when recording, both the video camera and the digital sound recorder have to be running at the same very precisely controlled rate, and this is provided by something called a 'sync pulse generator' or SPG. It provides a continuous series of very precise pulses at the rate(s) required by the equipment to ensure that they capture 25 picture frames and 48,000 audio samples every second (that is, 1920 samples per frame).

In a studio setting, there will be one SPG which originates all the required timing pulses, which are then distributed as required. On location, this is a little impractical as it is often desirable to have camera and sound moving independently of each other, so sync cables connecting the two back to a central SPG are not a great idea. In this case, the usual solution is to equip the camera and the sound recorder with their own internal SPG systems, and to synchronise these to each other at regular intervals. Crystal oscillators are extremely stable these days and once matched to each other, they will drift relative to each other very slowly indeed. Provided they are re-synced every couple of hours (usually whenever changing batteries or tapes, in practice) they will remain sufficiently close to each other's timing to be fixed together.

So that takes care of the rate at which pictures and sound are captured. The next step is to make sure that if separated, the pictures can subsequently be linked to the correct sound. This is achieved by using timecode. Timecode is simply a series of numbers (generally in the time format of hours, minutes, seconds and frames, but it could just as easily be a continuous stream of consecutive numbers). The timecode simply counts the SPG pulses in order to allocate each picture frame (or each block of 1920 audio samples) a unique number from which it can later be identified.

When the camera and sound SPGs are synchronised to run at the same rate, their associated timecode generators are also synchronised so that they start counting picture frames (or blocks of 1920 samples) from the same point. After that, the camera and sound recorder can do their own thing, safe in the knowledge that the rates at which the picture frames are being shot and the sound samples are being captured are identical, and that each is being identified with the same timecode number sequence.
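The counting described above can be sketched in a few lines of Python. This is only an illustration for the 25-frame, 48kHz case discussed here; the function names are made up for the example, and real timecode generators handle other frame rates and details that are ignored below.

```python
FRAME_RATE = 25            # picture frames per second (European standard)
SAMPLE_RATE = 48_000       # audio samples per second
SAMPLES_PER_FRAME = SAMPLE_RATE // FRAME_RATE   # 1920 samples per frame


def frames_to_timecode(frame_count):
    """Turn a running frame count into hours:minutes:seconds:frames."""
    frames = frame_count % FRAME_RATE
    total_seconds = frame_count // FRAME_RATE
    seconds = total_seconds % 60
    minutes = (total_seconds // 60) % 60
    hours = (total_seconds // 3600) % 24      # wraps at midnight, as time of day does
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


def timecode_to_frames(timecode):
    """Inverse of frames_to_timecode: recover the frame count."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * FRAME_RATE + frames


def first_sample_of_frame(frame_count):
    """Index of the audio sample that lines up with the start of a frame."""
    return frame_count * SAMPLES_PER_FRAME
```

Because both machines count from the same starting point at the same rate, the same frame count gives the same timecode on the camera and the sound recorder, and `first_sample_of_frame` identifies the block of 1920 samples that belongs to that picture frame.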

It is worth noting that the pulses and counting have to continue whether the recorders are actually recording anything or not. In practice, and purely for convenience, the timecode numbers are usually aligned to the actual time of day (TOD) when working in this mode, and hence this is often called 'TOD working'. Obviously, this practice will result in discontinuous timecode sequencing on the recorded tape as the recordings are started and stopped throughout the shooting day. Most editing controllers or workstations don't like that much, so it is usual to record for at least 10 seconds before the wanted material to make editing easier (although it is not disastrous if this can't be achieved).

In post-production, when it comes to putting the pictures and sound together, the rate at which the pictures are reproduced will be controlled by the pulses from the studio's SPG. The same SPG will also provide suitable pulses to control the rate at which the digital sound system produces audio samples — so we know that pictures and sound are being replayed at exactly the same rate that they were filmed at.

Now we just need to make sure they both start at the appropriate place, and this is where the timecode comes in. A suitable start point will be identified on the video source and the corresponding timecode noted. The sound system will then search for the same timecode number within its recordings and position itself accordingly. When the picture and sound replay is started, the speed of the two sources is controlled by the SPG, and the system will 'nudge' one of them (usually the sound, but not always) backwards or forwards slightly until the timecode information aligns precisely. Once aligned, the only thing controlling the speed is the pulses from the SPG.
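As a rough illustration of that 'nudge', the following Python sketch works out how far the sound source is from the picture, in frames and in samples, for the 25-frame, 48kHz case. The function names and the sign convention are assumptions made for the example, not a description of any particular synchroniser.

```python
FRAME_RATE = 25
SAMPLES_PER_FRAME = 48_000 // FRAME_RATE   # 1920


def timecode_to_frames(timecode):
    """Convert hours:minutes:seconds:frames into a frame count."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * FRAME_RATE + frames


def nudge_in_samples(picture_timecode, sound_timecode):
    """Samples the sound must move so its timecode matches the picture.

    Positive: the sound is behind and must move forwards.
    Negative: the sound is ahead and must move backwards.
    """
    offset_frames = timecode_to_frames(picture_timecode) - timecode_to_frames(sound_timecode)
    return offset_frames * SAMPLES_PER_FRAME


# Sound is two frames behind the picture, so it needs to jump forward
# by two blocks of 1920 samples.
print(nudge_in_samples("10:00:00:02", "10:00:00:00"))
```

Once the offset reaches zero, nothing further needs correcting, because both sources are being clocked by the same SPG.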

In the case of video equipment, the required pulses from the SPG are provided in the form of a composite combination of pulses called 'video B&B' (Black and Burst) or 'Colour Black'. This signal contains the vertical sync pulses that define the start and end of each picture frame, the horizontal sync pulses to define the start and end of each picture line, and the colour subcarrier which is used to make sure the colour information is coded correctly. In the case of digital audio equipment, the required pulses from the SPG are usually simple pulses at the sample rate — a signal called 'word clock'. Some systems will also use the AES or even S/PDIF composite signal, which embeds the word-clock information with the audio data.

Some video SPGs can also generate digital word clocks directly. In most cases, though, a B&B signal from the sync pulse generator has to be used as a reference to a digital clock generator, which then generates suitable digital word clocks for the digital equipment, locked to the video sync pulse generator.

Published October 2003

Thursday, June 27, 2019

Wednesday, June 26, 2019

Q. Are all Decibels equal?

By Hugh Robjohns

Equipment specifications often give input and output levels with units like dBu, dBV and dBFS. What's the difference?

Andrew Dunn

Technical Editor Hugh Robjohns replies: The decibel (dB) is a handy mathematical way of expressing a ratio between two quantities. It's handy for audio equipment because it is a logarithmic system, and our ears behave in a logarithmic fashion, so the two seem to suit each other well, and the results become more meaningful and consistent.

This chart compares the scale of various types of meter in relation to dBu values (left) and dBV values (right).

Ratio in dB = 20 × log10 (Vout / Vin)
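For anyone who would rather let a computer do the logarithms, here is a minimal Python sketch of that ratio formula and its inverse. The function names are just illustrative.

```python
import math


def voltage_ratio_to_db(v_out, v_in):
    """Express the ratio of two voltages in decibels."""
    return 20 * math.log10(v_out / v_in)


def db_to_voltage_ratio(db):
    """Go the other way: from decibels back to a voltage ratio."""
    return 10 ** (db / 20)


print(voltage_ratio_to_db(2.0, 1.0))   # doubling the voltage: roughly +6dB
print(voltage_ratio_to_db(1.0, 1.0))   # identical signals: 0dB
print(db_to_voltage_ratio(20))         # +20dB is ten times the voltage
```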

Sometimes, rather than compare two arbitrary signals in this way, we want to compare one signal with a defined reference level. That reference level is indicated by adding a suffix to the dB term. The three common ones in use in audio equipment are those you've asked about.

The standard reference level for audio signals in professional environments was defined a long time ago as being the level achieved when 1mW of power was dissipated in a 600Ω load. The origins are tied up in telecommunications and needn't concern us, but the salient fact is that 1mW in 600Ω produces an average (rms) voltage of 0.775V.

If you compare the reference level with itself, the dB figure is 0dB (the log of 1 is 0) — so the reference level described above was known as 0dBm — the 'm' referring to milliWatt.

Fortunately, the practice of terminating audio lines in 600Ω has long since been abandoned in (most) audio environments, but the reference voltage has remained in use. To differentiate between the old dBm (requiring 600Ω terminations) and the new system which doesn't require terminating, we use the descriptor: 0dBu — the 'u' meaning 'unterminated.' Thus, 0dBu means a voltage reference of 0.775V (rms), irrespective of load impedance. A lot of professional systems adopt a higher standard reference level of +4dBu, which is a voltage of 1.228V (rms).

Domestic and semi-professional equipment generally doesn't operate with such high signal levels as professional equipment, so a lower reference voltage was defined. This is -10dBV, where the 'V' suffix refers to 1 volt; hence the reference is 10dB below 1V, which is 0.316V (rms).

It is often useful to know the difference between 0dBu and 0dBV, and those equipped with a scientific calculator will be able to figure it out easily enough. For everyone else, the difference is 2.2dB (0dBV being the higher of the two), and the difference between +4dBu and -10dBV is 11.8dB. So in round numbers, converting from semi-pro to pro levels requires 12dB of extra gain, and going the other way needs a 12dB pad.
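For those without the scientific calculator, the same sums can be done with a minimal Python sketch. It assumes only the two reference voltages given above (1mW in 600Ω for dBu, and 1V for dBV); the function names are illustrative.

```python
import math

DBU_REFERENCE_VOLTS = math.sqrt(0.001 * 600)   # 1mW in 600 ohms, about 0.775V rms
DBV_REFERENCE_VOLTS = 1.0


def dbu_to_volts(dbu):
    return DBU_REFERENCE_VOLTS * 10 ** (dbu / 20)


def dbv_to_volts(dbv):
    return DBV_REFERENCE_VOLTS * 10 ** (dbv / 20)


def volts_to_dbu(volts):
    return 20 * math.log10(volts / DBU_REFERENCE_VOLTS)


def volts_to_dbv(volts):
    return 20 * math.log10(volts / DBV_REFERENCE_VOLTS)


pro_level = dbu_to_volts(4)        # professional reference, about 1.228V
semi_pro_level = dbv_to_volts(-10) # semi-pro reference, about 0.316V

print(round(pro_level, 3), round(semi_pro_level, 3))
# Gain needed to go from semi-pro to pro level, in dB (about 11.8)
print(round(20 * math.log10(pro_level / semi_pro_level), 1))
# 0dBV expressed in dBu, i.e. the offset between the two scales
print(round(volts_to_dbu(dbv_to_volts(0)), 1))
```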

The third common decibel term — dBFS — is used in digital systems. The only valid reference point in a digital system is the maximum quantising level or 'full scale' and this is what the 'FS' suffix relates to. So we have to count down from the top and signals usually have negative values.

To make life easier, we build in a headroom allowance, and define it in terms of a nominal working level. This is generally taken to be -18dBFS in Europe which equates to 0dBu when interfacing with analogue equipment, or -20dBFS in America which equates to +4dBu in analogue equipment.
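Those two alignment conventions amount to a fixed offset between the digital and analogue scales, which can be sketched in Python as follows. The dictionary keys 'europe' and 'america' and the function names are simply labels chosen for this example.

```python
import math

# Offset in dB to add to a dBFS figure to get dBu, per convention:
#   Europe:  -18dBFS lines up with 0dBu   -> offset of 18
#   America: -20dBFS lines up with +4dBu  -> offset of 24
ALIGNMENT_OFFSET_DB = {"europe": 18.0, "america": 24.0}


def dbfs_to_dbu(dbfs, convention="europe"):
    return dbfs + ALIGNMENT_OFFSET_DB[convention]


def dbu_to_dbfs(dbu, convention="europe"):
    return dbu - ALIGNMENT_OFFSET_DB[convention]


def sample_to_dbfs(sample_value, full_scale=1.0):
    """Level of a single sample value relative to full scale."""
    return 20 * math.log10(abs(sample_value) / full_scale)


print(dbfs_to_dbu(-18, "europe"))    # nominal level in Europe: 0dBu
print(dbfs_to_dbu(-20, "america"))   # nominal level in America: +4dBu
print(dbfs_to_dbu(0, "europe"))      # analogue level that hits full scale
```

Seen this way, the headroom allowance is simply the gap between the nominal working level and 0dBFS: 18dB under the European convention and 20dB under the American one.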

Published October 2003

Tuesday, June 25, 2019

Monday, June 24, 2019

Q. What's the difference between a talk box and a vocoder?

By Craig Anderton

In addition to its built-in microphone, the Korg MS2000B's vocoder accepts external line inputs for both the carrier and modulator signals.

I've heard various 'talking instrument' effects which some people attribute to a processor called a vocoder, while others describe it as a 'talk box'. Are these the same devices? I've also seen references in some of Craig Anderton's articles about using vocoders to do 'drumcoding'. How is this different from vocoding, and does it produce talking instrument sounds?

James Hoskins

SOS Contributor Craig Anderton replies: A 'talk box' is an electromechanical device that produces talking instrument sounds. It was a popular effect in the '70s and was used by Peter Frampton, Joe Walsh and Stevie Wonder, amongst others. It works by amplifying the instrument you want to make 'talk' (often a guitar), and then sending the amplified signal to a horn-type driver, whose output goes to a short, flexible piece of tubing. This terminates in the performer's mouth, which is positioned close to a mic feeding a PA or other sound system. As the performer says words, the mouth acts like a mechanical filter for the acoustic signal coming in from the tube, and the mic picks up the resulting, filtered sound. Thanks to the recent upsurge of interest in vintage effects, several companies have begun producing talk boxes again, including Dunlop (the reissued Heil Talk Box) and Danelectro, whose Free Speech talk box doesn't require an external mic, processing the signal directly.

The vocoder, however, is an entirely different animal. The forerunner to today's vocoder was invented in the 1930s for telecommunications applications by an engineer named Homer Dudley; modern versions create 'talking instrument' effects through purely electronic means. A vocoder has two inputs: one for an instrument (the carrier input), and one for a microphone or other signal source (the modulator input, sometimes called the analysed input). Talking into the microphone superimposes vocal effects on whatever is plugged into the instrument input.

The principle of operation is that the microphone feeds several paralleled filters, each of which covers a narrow frequency band. This is electronically similar to a graphic equaliser. We need to separate the mic input into these different filter sections because in human speech, different sounds are associated with different parts of the frequency spectrum.

For example, an 'S' sound contains lots of high frequencies. So, when you speak an 'S' into the mic, the higher-frequency filters fed by the mic will have an output, while there will be no output from the lower-frequency filters. On the other hand, plosive sounds (such as 'P' and 'B') contain lots of low-frequency energy. Speaking one of these sounds into the microphone will give an output from the low-frequency filters. Vowel sounds produce outputs at the various mid-range filters.

But this is only half the picture. The instrument channel, like the mic channel, also splits into several different filters and these are tuned to the same frequencies as the filters used with the mic input. However, these filters include DCAs or VCAs (digitally controlled or voltage-controlled amplifiers) at their outputs. These amplifiers respond to the signals generated by the mic channel filters; more signal going through a particular mic channel filter raises the amp's gain.

Now consider what happens when you play a note into the instrument input while speaking into the mic input. If an output occurs from the mic's lowest-frequency filter, then that output controls the amplifier of the instrument's lowest filter, and allows the corresponding frequencies from the instrument input to pass. If an output occurs from the mic's highest-frequency filter, then that output controls the instrument input's highest-frequency filter, and passes any instrument signals present at that frequency.

As you speak, the various mic filters produce output signals that correspond to the energies present at different frequencies in your voice. By controlling a set of equivalent filters connected to the instrument, you superimpose a replica of the voice's energy patterns on to the sound of the instrument plugged into the instrument input. This produces accurate, intelligible vocal effects.
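The whole signal flow can be sketched in plain Python. This is a deliberately simplified model, not a description of any particular vocoder: the band-pass filters use a standard biquad design, the 'mic channel' level detectors are simple rectifier-and-smoothing envelope followers, and the filter Q, smoothing frequency and band centres are all arbitrary choices made for the example.

```python
import math


def bandpass(signal, centre_hz, sample_rate, q=4.0):
    """Narrow band-pass filter (biquad, unity gain at the centre frequency)."""
    w0 = 2 * math.pi * centre_hz / sample_rate
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b0, b2 = alpha / a0, -alpha / a0
    a1, a2 = -2 * math.cos(w0) / a0, (1 - alpha) / a0
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in signal:
        y = b0 * x + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def envelope(signal, sample_rate, smoothing_hz=30.0):
    """Follow the level of a signal: rectify, then smooth."""
    coeff = math.exp(-2 * math.pi * smoothing_hz / sample_rate)
    level = 0.0
    out = []
    for x in signal:
        level = coeff * level + (1 - coeff) * abs(x)
        out.append(level)
    return out


def vocode(modulator, carrier, sample_rate, band_centres_hz):
    """Impose the modulator's band-by-band energy on the carrier."""
    length = min(len(modulator), len(carrier))
    output = [0.0] * length
    for centre in band_centres_hz:
        # Mic channel: how much energy is in this band right now?
        control = envelope(bandpass(modulator, centre, sample_rate), sample_rate)
        # Instrument channel: same band, with its gain set by the mic channel.
        band = bandpass(carrier, centre, sample_rate)
        for i in range(length):
            output[i] += band[i] * control[i]
    return output
```

Each pass through the loop in `vocode` is one filter pair: the envelope plays the role of the control signal, and the multiplication stands in for the VCA or DCA on the instrument filter's output. A practical vocoder would use many more bands than a sketch like this is likely to be tried with.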

Vocoders can be used for much more than talking instrument effects. For example, you can play drums into the microphone input instead of voice, and use this to control a keyboard (I've called this 'drumcoding' in previous articles). When you hit the snare drum, that will activate some of the mid-range vocoder filters. Hitting the bass drum will activate the lower vocoder filters, and hitting the cymbals will cause responses in the upper frequency vocoder filters. So, the keyboard will be accented by the drums in a highly rhythmic way. This also works well for accenting bass and guitar parts with drums.

Note that for best results, the instrument signal should have plenty of harmonics, or the filters won't have much to work on.

Published October 2003

Saturday, June 22, 2019

Friday, June 21, 2019

Q. Do I need a dedicated FM soft synth?

By Craig Anderton

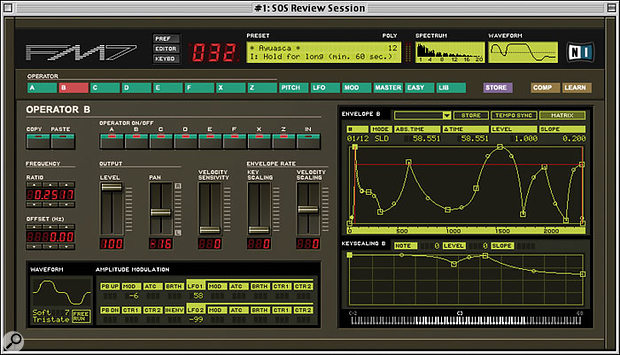

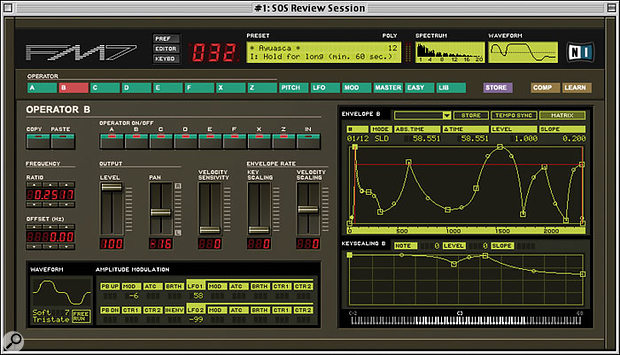

Native Instruments' FM7 soft synth is equipped with six operators, each with a 31-stage envelope which can be edited from this screen.

I'm too young to have experienced the first wave of FM synthesis, but I find those sounds very interesting. I was considering buying Native Instruments' FM7 soft synth, but I've noticed that many virtual analogue soft synths claim to offer FM capabilities, and provide virtual analogue besides. Is there any advantage to the extra expense of something like the FM7?

Richard Ledbetter

SOS Contributor Craig Anderton replies: If your interest in FM synthesis is at all serious, then yes, there are advantages to dedicated FM soft synths like Native Instruments' FM7. But to understand why, it might first be helpful to explore some FM synthesis basics.

The basic 'building block' of FM synthesis is called an operator. This consists of an oscillator (traditionally a sine wave, but other waveforms are sometimes used), a DCA to control its output, and an envelope to control the DCA. But the most important aspect is that the oscillator has a control input to vary its frequency.

Feeding one operator's output into another operator's control input creates complex modulations that generate 'side-band' frequencies. The operator being controlled is called the carrier; the one doing the modulation is called the modulator. The sideband frequencies are mathematically related to the modulator and carrier frequencies. (Note that the modulator signal needs to be in the audio range. If it's sub-audio, then varying the carrier's frequency simply creates vibrato.)
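To make the carrier/modulator relationship concrete, here is a minimal two-operator sketch in Python. It is written in the phase-modulation form commonly used for digital 'FM', with no envelopes, and the function names and default values are assumptions made for the example. The sidebands fall at the carrier frequency plus and minus whole-number multiples of the modulator frequency, which is what the second function lists.

```python
import math


def fm_two_operator(carrier_hz, modulator_hz, index, sample_rate=48_000, seconds=0.01):
    """Render one modulator driving one carrier (no envelopes)."""
    samples = []
    for i in range(int(sample_rate * seconds)):
        t = i / sample_rate
        modulation = index * math.sin(2 * math.pi * modulator_hz * t)
        samples.append(math.sin(2 * math.pi * carrier_hz * t + modulation))
    return samples


def sideband_frequencies(carrier_hz, modulator_hz, order=3):
    """Frequencies at carrier +/- k * modulator, for k up to 'order'.

    Negative results reflect back into the positive range, hence abs().
    """
    frequencies = set()
    for k in range(-order, order + 1):
        frequencies.add(abs(carrier_hz + k * modulator_hz))
    return sorted(frequencies)


print(sideband_frequencies(440, 110, order=2))
```

The `index` argument sets how strongly the modulator drives the carrier: at zero the output is a plain sine wave, and as it rises more of the listed sidebands become audible.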

Operators can be arranged in many ways, each of which is called an algorithm. Basic Yamaha FM synths of the mid-80s had four operators. A simple algorithm suitable for creating classic organ sounds is to feed each operator's output to a summed audio output, in which case each operator is a carrier. A more complex algorithm might feed the output from two operators to the audio output, with each being modulated by one of the other operators. Or, Operator 1 might modulate Operator 2, which modulates Operator 3, which modulates Operator 4, which then feeds the audio output. The latter can produce extremely complex modulations. Sometimes feedback among operators is also possible, which makes things even more interesting.
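The three four-operator arrangements just described can be sketched as follows. This is only a sketch under simplifying assumptions: the operators are bare sine oscillators with no envelopes or DCAs, feedback is left out, and the function names and the single `index` parameter are invented for the example.

```python
import math


def operator(freq_hz, t, phase_mod=0.0, level=1.0):
    """One sine operator; phase_mod is whatever arrives at its control input."""
    return level * math.sin(2 * math.pi * freq_hz * t + phase_mod)


def parallel_algorithm(freqs_hz, t):
    """Organ-style: every operator is a carrier, summed to the output."""
    return sum(operator(f, t) for f in freqs_hz) / len(freqs_hz)


def paired_algorithm(freqs_hz, t, index=1.0):
    """Two carriers reach the output, each modulated by one other operator."""
    mod_a, car_a, mod_b, car_b = freqs_hz
    a = operator(car_a, t, phase_mod=index * operator(mod_a, t))
    b = operator(car_b, t, phase_mod=index * operator(mod_b, t))
    return (a + b) / 2


def stacked_algorithm(freqs_hz, t, index=1.0):
    """Operator 1 modulates 2, which modulates 3, and so on; the last is heard."""
    signal = 0.0
    for f in freqs_hz:
        signal = operator(f, t, phase_mod=index * signal)
    return signal


frequencies = [220.0, 440.0, 660.0, 880.0]
t = 0.001
print(parallel_algorithm(frequencies, t))
print(paired_algorithm(frequencies, t))
print(stacked_algorithm(frequencies, t))
```

The same four operators give three different results purely because of how they are wired together, which is all an 'algorithm' is.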

So more operators means more algorithm options. The Yamaha DX7 had six operators and 32 fixed algorithms; FM7 actually offers considerably more power because it lets you create your own algorithms.

A virtual analogue synth that offers FM typically allows one oscillator to modulate another one, essentially serving as a primitive two-operator FM synth with one algorithm. While this is enough to let you experiment a bit with simple FM effects, the range of sounds is extremely limited compared to the real thing. You'll be able to get some nice clangy effects and maybe even some cool bass and brass sounds, but don't expect much more than that unless there are multiple operators and algorithms.

Published November 2003

Thursday, June 20, 2019

Wednesday, June 19, 2019

Q. Should I use my PC's ACPI mode?

By Martin Walker

I've just bought a PC laptop and a MOTU 828. I installed the software and have been using Emagic Logic 5.5. However, when I turn off the computer's Advanced Configuration and Power Interface (ACPI) it no longer recognises that the 828 is there. This is a common PC/Windows tweak for making audio apps run more efficiently, so I was hoping that the 828, or any other Firewire hardware for that matter, would be able to run with ACPI turned off. Can anyone enlighten me on this subject?

SOS Forum Post

SOS PC Notes columnist Martin Walker replies: It's strange that your MOTU 828 is no longer recognised, since Plug and Play will still detect exactly the same set of devices when you boot your PC, including the host controllers for both your USB and Firewire ports. It's then up to Windows to detect the devices plugged into their ports, and this ought not to be any different when running under ACPI or Standard mode.

MOTU have reported some problems running their 828 interface with Dell Inspiron laptops, due to IRQ sharing between the Firewire and graphics, and this could possibly be cured by changing to Standard Mode, but there's a much wider issue here. While switching from ACPI to Standard Mode has solved quite a few problems for some musicians in the past (those with M-Audio soundcards have certainly benefitted), it shouldn't be used as a general-purpose cure-all, and particularly not with Windows XP, which, I suspect, is installed on your recently bought PC laptop.

Since Windows XP needs very little tweaking compared with the Windows 9x platform, I would recommend all PC Musicians leave their machines running in ACPI mode on XP. I'd only suggest turning it off if there is some unresolved problem such as occasional audio clicks and pops that won't go away with any other OS tweaks, no matter how you set the soundcard's buffer size, or the inability to run with an audio latency lower than about 12ms without glitching.

Some modern motherboards now offer an Advanced Programmable Interrupt Controller (APIC) that offers 24 interrupts under Windows XP in ACPI mode rather than the 16 available to Standard Mode, so if yours provides this feature you should always stick to ACPI. Apparently it's also faster at task-switching, leaving a tiny amount more CPU for running applications. The latest hyperthreading processors also require ACPI to be enabled to use this technology, since each logical processor has its own local APIC. Moreover, laptops benefit from ACPI far more than desktop PCs, since it's integrated with various Power Management features that extend battery life. If I were you, I'd revert to ACPI.

Published October 2003

Tuesday, June 18, 2019

Monday, June 17, 2019

Saturday, June 15, 2019

Friday, June 14, 2019

Thursday, June 13, 2019

Wednesday, June 12, 2019

Voice Channels Explained

By Paul White & Sam Inglis

We answer some commonly-asked questions on the use of voice channels in recording.

TL Audio Ivory VP5051 voice channel.

Voice channels are signal-processing boxes that combine mic and line preamplifiers with facilities such as EQ, compression, limiting and enhancement. However, not all models offer all the above features and, in addition, the preamp section may or may not include an instrument-level input with a high input impedance, suitable for use with electric guitars and basses. The popularity of voice channels is linked to the increased use of desktop computer-based music systems, many of which do away with the need for a traditional recording mixer. They're also useful in a more traditional recording environment where the operator works mainly by overdubbing single parts and where quality requirements dictate the use of a preamp section better than that provided in the mixing console.

Q. Modern mixers tend to have very high-quality signal paths, so what's to be gained from using a voice channel?

Even a well-designed mixer has a fairly long and convoluted signal path, whereas a voice channel can be plugged directly into the input of the recorder, providing a much simpler and cleaner path. It's also the case that as a voice channel has only one preamp section, this is often more sophisticated than those you'd expect to find in a project studio mixer: it may, for example, have a much wider audio bandwidth, better noise performance, lower distortion and more headroom. Some models also feature valve or tube stages to add a little flattering tonal coloration to the sound.

There's less likelihood of introducing crosstalk or ground-loop hum when you have only a single voice channel patched into your recorder, especially if the recorder has balanced inputs, and because a voice channel includes other processing sections, it makes it possible to treat a signal prior to recording, if appropriate.

Q. In what situations is it best to add processing as you record?

Much depends on the type of recording system you have. In a traditional studio based around an analogue mixer and analogue tape machine, it is generally safest to record the signal completely flat or with just a small amount of compression. The reason is that if you do compress and equalise a signal while recording, then find it doesn't fit well with the rest of the mix, making the desired changes will be more difficult than if you started out with an unmodified signal.

In a digital recording situation where you are still using a normal mixer, the previous advice still applies, though if your voice channel has a separate limiter, you may want to use this to ensure that the recorder can never be overloaded by unexpected signal peaks. Few limiters are completely transparent in operation, but they all sound a lot better than digital clipping. Should your voice channel have a variable-ratio compressor but no limiter, you may be able to emulate limiting by setting the compressor to its highest ratio, then adjusting the threshold so that compression occurs just before digital full scale on the recorder's meters. You'll need to use the fastest attack time combined with a release time of around 200ms. Some compressor designs, particularly those based around optical devices, will, however, be too slow to catch transient peaks even using the fastest available attack time, so a separate limiter may prove necessary.
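To see why a compressor at its highest ratio behaves much like a limiter, here is a minimal Python sketch of a hard-knee compressor's static gain curve. The function name, the -3dB threshold and the ratios are illustrative assumptions rather than settings from any particular voice channel, levels are taken relative to the recorder's digital full scale, and attack and release behaviour is not modelled.

```python
def compressed_level_db(input_db, threshold_db, ratio):
    """Static hard-knee compressor curve: output level (dB) for a given input level (dB)."""
    if input_db <= threshold_db:
        return input_db  # below the threshold the signal passes unchanged
    # above the threshold, every `ratio` dB of extra input gives 1 dB of extra output
    return threshold_db + (input_db - threshold_db) / ratio


# Assumed example: a peak arriving 9 dB above a threshold set at -3 dB
peak_db = 6.0
threshold_db = -3.0
for ratio in (2.0, 4.0, 20.0):
    out = compressed_level_db(peak_db, threshold_db, ratio)
    print(f"ratio {ratio:>4}:1 -> output peak {out:+.2f} dB")
```

At the assumed 20:1 ratio the 9dB overshoot is held to less than half a dB above the threshold, whereas at 2:1 the same peak would still exceed full scale. This only describes the steady-state behaviour; a slow attack can still let fast transients through, as noted above.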

When working with a PC-based studio, the question of whether to process or not is a little more complex, because your system may only have stereo outputs, or it may have multiple outputs connected to a digital or analogue mixer. You may also have software plug-ins capable of high-quality compression, limiting, EQ, gating and so on.

As a rule, the processing available within an analogue voice channel is better than that provided by most low-cost plug-ins, though some of the more serious plug-ins are exceptionally good. For systems with stereo outputs, you won't have the chance to add any more analogue processing after recording, so the best bet is to process as you record. Even so, I find it's safest to use slightly less compression and EQ than you think you might ultimately need if you have plug-ins that can handle these tasks, as you can always call upon your plug-ins for fine-tuning when you come to mix. The comments about limiting also apply, so if your unit has a separate limiter, use it to prevent overloads. Similarly, if you don't need traditional compression when recording and you don't have a separate limiter, then use the compressor as a limiter as described earlier.

If your system has separate outputs feeding a conventional mixer, you can save your processing until the mixing stage, just as you might in a tape-based studio.

A few voice channels provide enhancement as an option; normally I'd only use an enhancer when mixing, but if you're working with a mic that has a poor high-end response, a gentle degree of enhancement can help produce a clearer recording.

Q. How can I use a voice channel when mixing?

If you're working with a conventional analogue mixer, you can use the processing section of the voice channel via the mixer insert points, just like any other processor. Simply feed the mixer insert send into the line input of the voice channel, then take the voice channel output back to the insert return. Most mixers have unbalanced insert points, so if your voice channel offers both balanced and unbalanced inputs, it may be best to use the unbalanced ones. Note that unbalanced connections are sometimes fixed at -10dBV sensitivity while the balanced connections are +4dBu.
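The gap between the two operating levels can be worked out directly. The sketch below assumes the low-level figure refers to the widely used -10dBV consumer reference (0dBV = 1V RMS) and that the professional level is +4dBu (0dBu = about 0.775V RMS); the function names are purely illustrative.

```python
import math

DBU_REF_VOLTS = math.sqrt(0.6)  # 0 dBu is about 0.7746 V RMS (1 mW into 600 ohms)
DBV_REF_VOLTS = 1.0             # 0 dBV is 1 V RMS


def dbu_to_volts(level_dbu):
    """Convert a level in dBu to RMS volts."""
    return DBU_REF_VOLTS * 10 ** (level_dbu / 20)


def dbv_to_volts(level_dbv):
    """Convert a level in dBV to RMS volts."""
    return DBV_REF_VOLTS * 10 ** (level_dbv / 20)


pro_volts = dbu_to_volts(4.0)         # nominal professional level
consumer_volts = dbv_to_volts(-10.0)  # nominal consumer/semi-pro level
gap_db = 20 * math.log10(pro_volts / consumer_volts)
print(f"+4 dBu  = {pro_volts:.3f} V RMS")
print(f"-10 dBV = {consumer_volts:.3f} V RMS")
print(f"difference = {gap_db:.1f} dB")
```

Under these assumptions the two nominal levels sit a little under 12dB apart, which is why a mismatch usually shows up as meters that either barely move or sit permanently near the top.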

Q. Are there any special gain structure considerations when using a voice channel?

When recording via a voice channel, you should always set the input gain trim so that the meters show a healthy reading. In most cases, this means that signal peaks will push the meter close to the top of its scale, but not far enough to cause the peak or clip indicator to come on. If there's an output meter that comes after the output level control, ensure that this is also producing a sensible reading. If you're recording directly from the voice channel, then you may have to use the output level control to set the record level, so be aware that digital equipment often requires much higher signal levels than analogue. In practice, many voice channels need to run with their output sections almost flat out to get enough level into the digital recorder. This is fine provided the voice channel has enough headroom and no clip indicators light up.

A final point concerning gain structure is to take care how you use the compressor make-up gain control. This is provided so that you can compensate for any gain loss caused by compression, and perhaps the best way to adjust it is to use the compressor bypass switch to compare the maximum output meter readings (or your recorder's input meter if your voice channel has no output metering) with compression switched in and out. The peak signal levels should be similar both with and without compression, though the average level will be higher with compression. Adjust the compressor make-up gain until this is the case.
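The bypass comparison described above amounts to a simple subtraction in dB. The following Python sketch uses made-up meter readings (all three figures are assumptions for illustration) to show how matching the peaks raises the quieter material.

```python
def makeup_gain_db(peak_bypassed_db, peak_compressed_db):
    """Make-up gain that restores the compressed peak to the bypassed peak reading."""
    return peak_bypassed_db - peak_compressed_db


# Hypothetical meter readings, in dB relative to the top of the meter scale
peak_bypassed = -2.0     # loudest peak with the compressor bypassed
peak_compressed = -8.0   # same peak with the compressor switched in, make-up at 0 dB
quiet_passage = -24.0    # assumed to sit below the threshold, so left uncompressed

gain = makeup_gain_db(peak_bypassed, peak_compressed)
print(f"make-up gain needed: {gain:.1f} dB")
print(f"peak after make-up: {peak_compressed + gain:.1f} dB")
print(f"quiet passage after make-up: {quiet_passage + gain:.1f} dB")
```

With these assumed readings the peaks end up where they started, while the quiet passage comes up by the full make-up amount, which is the higher average level the text refers to.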

If you have a recorder that operates at a low level, such as -10dBV, and you find you have to turn the voice channel gain way down to get a sensible record level, then switch to using the voice channel's unbalanced output if it has one. Most recorders that operate at -10dBV will be unbalanced in any case.

Q. Can I record a guitar or bass directly via a voice channel?

You can record an active guitar or bass (those that require batteries) via any device that has a line input with enough gain. However, ordinary passive guitars and basses need to run into an input impedance of 500kΩ or ideally much higher. Some voice-channel manufacturers include an instrument input for this application, in which case you can plug straight into it. If you don't have an instrument input, you can still use the line input via a suitable high-impedance DI box.
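A rough way to picture why input impedance matters is to treat the pickup and the input as a voltage divider. The Python sketch below is a simplification under stated assumptions: the 100k source impedance is a hypothetical round figure (a real passive pickup's impedance varies with frequency), and the 10k line input is likewise assumed rather than taken from any specific unit.

```python
import math


def loading_loss_db(source_ohms, input_ohms):
    """Level change (dB) from the voltage divider formed by source and input impedance."""
    return 20 * math.log10(input_ohms / (source_ohms + input_ohms))


ASSUMED_PICKUP_OHMS = 100_000  # hypothetical pickup source impedance, for illustration only

examples = (
    ("assumed 10k line input", 10_000),
    ("500k instrument input", 500_000),
    ("1M DI box input", 1_000_000),
)
for label, input_ohms in examples:
    loss = loading_loss_db(ASSUMED_PICKUP_OHMS, input_ohms)
    print(f"{label:<24} {loss:6.2f} dB")
```

In this simplified model the low-impedance line input throws away most of the signal, while the 500k and 1M inputs lose only a dB or two. In practice the loss is worst at high frequencies, which is why a loaded passive guitar tends to sound dull as well as quiet.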

Don't expect the sound you get from a voice channel to be exactly the same as you'd get from a guitar amp — guitar amps have tailored frequency response curves to give them their characteristic sound, so you may need to use some EQ to get the sound just the way you want it. You can use compression to fatten up and even out both guitar and bass sounds, but be aware that using overdrive pedals while DI'ing is likely to produce a rather edgy sound because a voice channel doesn't have the low-pass filtering characteristics of a typical guitar speaker cab to smooth it out. You could of course use an amp simulator after the voice channel.

Q. Is it possible to use a voice channel in conjunction with a mixer without making the signal path even longer?

If you want to use the voice channel instead of one of the ordinary mixer channels, you could feed its output directly into the insert return of one of the console's groups. This will allow you to route the signal to the recorder without having to change any wiring, and it will also keep the signal path sensibly short. Most group insert points come before the group fader, so the record level will be affected by both the voice channel's output level setting and by the group fader position. It's probably safest to set the group fader at its 0dB position and then adjust the record level from the voice channel.

Q. I do all my recording and mixing on my computer. Will buying a voice channel allow me to do without a hardware mixer?

A voice channel will certainly allow you to get mic and instrument signals into your computer with decent sound quality, provided of course you have a reasonable soundcard. Even if you are using a voice channel for all your preamplification and processing, however, it may still be useful to have a mixer in your computer recording setup. If you have external MIDI sound sources, you will need some way of monitoring these in conjunction with the outputs from your computer, which will make at least a small mixer necessary.

Even if you don't use external MIDI sound sources, using a monitor mixer may help with latency when recording. Unless you have a 'zero-latency' soundcard, or one with very well-written drivers, you are likely to suffer a delay between putting a signal into your computer via the soundcard, and hearing the processed signal as it emerges from the computer again. This delay or 'latency' can make recording overdubs extremely difficult.
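One common contributor to this delay is the audio buffering used by the soundcard driver. As a rough illustration, and not a model of any specific card, the Python sketch below estimates the delay from an assumed buffer size and sample rate, counting one buffer on the way in and one on the way out; converter and software processing delays are ignored.

```python
def buffer_latency_ms(buffer_samples, sample_rate_hz):
    """Time taken to fill (or play out) one buffer, in milliseconds."""
    return buffer_samples / sample_rate_hz * 1000


SAMPLE_RATE_HZ = 44_100  # assumed sample rate

for buffer_samples in (128, 512, 1024, 4096):  # assumed buffer sizes
    one_way = buffer_latency_ms(buffer_samples, SAMPLE_RATE_HZ)
    round_trip = 2 * one_way  # one input buffer plus one output buffer
    print(f"{buffer_samples:>5} samples: {one_way:6.2f} ms one way, "
          f"about {round_trip:6.2f} ms through the computer")
```

Under these assumptions a 1024-sample buffer already adds around 46ms between input and output, which is enough to be distracting when overdubbing. Monitoring the voice channel's output through a small mixer sidesteps the computer's delay altogether.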

For more about avoiding latency in a computer-based setup, see SOS August 1999.

Q. Should I consider a voice channel with a digital output?

The obvious answer here is that you can only make use of a digital output if your recorder has a compatible digital input! The A-D converters in a good voice channel may well be better than those in a cheap recorder or soundcard. If your recorder or soundcard has indifferent A-D converters, but sports a digital audio input, then it may well be worth using the digital input and having your audio converted by a higher-spec A-D in your voice channel.

Q. Is there any practical difference between using a single stereo voice channel and two separate mono channels?

For stereo sources, you can just treat two separate voice channels, or the two channels of a dual-channel model, like separate mixer channels. However, some two-channel models allow the compressors to be linked for true-stereo operation, which keeps the stereo image more stable.

Published April 2000
