By John Walden

Amongst Cubase 9’s new features is a whole new track type dedicated to quick and easy sampling.

One of Cubase 9’s big headline features is the Sampler Track, and it’s available in all three versions of Cubase (Pro, Artist and Elements). Compared with Steinberg’s HALion, or any ‘full-fat’ third-party sampler plug-in, the Sampler Track is relatively modest in its sampling functionality, but it scores highly for speed, ease of use and deep integration with your Cubase projects.

To explore some of the pros and cons in this first iteration of the Sampler Track, I’ll focus on one example: extracting hits from an audio drum loop. Cubase already offers plenty of scope for experimenting with pre-recorded drum loops — the Sample Editor, AudioWarp, HitPoints and Groove Agent SE features combine to form a powerful toolkit. So, in terms of creative workflow, let’s also consider what the Sampler Track brings to the party.

In the new multi-pane, single-window layout of Cubase 9, one of the four tabs in the Lower Zone is Sampler Control. The easiest way to create a new Sampler Track is to drag and drop an audio sample into the (initially empty) Sampler Control zone from an existing audio track, the MediaBay or even from the Finder/File Explorer of your host OS. This process will automatically create a Sampler Track and map your sample across a default C3 to C6 key range ready to be played via MIDI — it’s super-easy!
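By default, Cubase names notes using the convention in which middle C (MIDI note 60) is labelled C3, so the default C3 to C6 mapping corresponds to MIDI notes 60 to 96, a span of 37 keys. The short Python sketch below makes that arithmetic explicit. `note_to_midi` is a hypothetical helper for illustration, not part of Cubase, and it assumes the C3 = 60 convention (some other manufacturers call middle C ‘C4’).

```python
# Hypothetical helper, not a Cubase API: assumes the naming convention
# in which middle C (MIDI note 60) is called C3.
NOTE_OFFSETS = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
                "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}

def note_to_midi(name: str) -> int:
    """Convert a note name such as 'C3' or 'F#-1' to a MIDI note number."""
    # The pitch class is one letter plus an optional sharp; the rest is the octave.
    split = 2 if len(name) > 1 and name[1] == "#" else 1
    pitch, octave = name[:split], int(name[split:])
    # With C3 = 60, octave -2 begins at MIDI note 0.
    return (octave + 2) * 12 + NOTE_OFFSETS[pitch]

low, high = note_to_midi("C3"), note_to_midi("C6")
print(low, high, high - low + 1)  # 60 96 37
```

Under the C4 = 60 convention the octave offset would be 1 rather than 2.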

The Sampler Control zone is split into four key sections. The waveform display allows you to define the loop and sustain regions for your source sample, while the keyboard display allows you to constrain the MIDI note-mapping range. The key control in the top-most toolbar strip is the Loop Mode. There are various options here, but for our drum-hits example selecting ‘No Loop’ and enabling the One Shot button (the icon looks like a circular target) is a good start.

In No Loop mode, all you need do in the waveform display is set the start and end points of the drum hit by dragging the two white ‘S’ markers to the desired locations. Zoom controls (bottom right of the waveform display) allow more precise placement. The markers also include fade options, and setting a short fade-out can help avoid glitches on playback. That said, you get more control over the amplitude of the sample’s playback by visiting the Amp envelope...
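Conceptually, the start and end markers select a slice of the source audio, and a short fade-out ramps the tail down to silence so that playback doesn’t stop abruptly mid-waveform, a common cause of clicks. The sketch below models that on a plain list of sample values. It is an illustration under that simplifying assumption, not how Cubase implements it internally, and `trim_with_fade` is a hypothetical name.

```python
def trim_with_fade(samples, start, end, fade_len):
    """Return samples[start:end] with a linear fade-out over its last fade_len samples.

    Hypothetical helper: `start` and `end` stand in for the two marker
    positions, measured in samples.
    """
    if not 0 <= start < end <= len(samples):
        raise ValueError("markers must satisfy 0 <= start < end <= len(samples)")
    hit = list(samples[start:end])
    fade_len = min(fade_len, len(hit))
    first = len(hit) - fade_len
    for i in range(fade_len):
        # Gain falls linearly across the fade, reaching 0.0 on the final sample.
        gain = (fade_len - 1 - i) / max(fade_len - 1, 1)
        hit[first + i] *= gain
    return hit

print(trim_with_fade([0.5] * 10, start=2, end=8, fade_len=3))
# [0.5, 0.5, 0.5, 0.5, 0.25, 0.0]
```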

The tiny envelope icon (top-right of the Amp section) replaces the waveform display with a flexible envelope editor, where you can add multiple nodes to create precisely the shape you require. For this example, I’ve chosen the ‘One Shot’ envelope mode and emphasised the attack portion of the drum hit for a bit more impact.
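A multi-node envelope can be thought of as a curve drawn through (time, level) breakpoints. The following sketch assumes straight-line segments between nodes (Cubase’s editor may shape segments differently), and the node values, including the brief peak that emphasises the attack, are hypothetical.

```python
from bisect import bisect_right

def envelope_level(nodes, t):
    """Level at time t, interpolated linearly between sorted (time, level) nodes."""
    times = [time for time, _ in nodes]
    if t <= times[0]:
        return nodes[0][1]
    if t >= times[-1]:
        return nodes[-1][1]
    # bisect_right finds the first node strictly after t, so t lies in [t0, t1).
    i = bisect_right(times, t)
    (t0, v0), (t1, v1) = nodes[i - 1], nodes[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

# Hypothetical one-shot envelope (times in ms): a fast rise to a peak that
# emphasises the attack, a quick drop to 0.6, then a decay to silence.
ONE_SHOT = [(0, 0.0), (5, 1.0), (50, 0.6), (300, 0.0)]

for t in (0, 2.5, 5, 27.5, 300):
    print(t, round(envelope_level(ONE_SHOT, t), 3))
```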

Similar envelope editors are available for the Pitch and Filter sections, and you can get creative by adding just a little pitch variation and some tonal changes via the filter. The different filter types range from gentle overdrive to more extreme bit-crushing. Again, for isolated drum hits, setting the pitch and filter envelopes to One Shot mode is best, and you can control the degree of envelope modulation via the AMT slider at the far left of each envelope editor panel.

Other options in the Sampler Control panel are worth exploring as you craft your drum hit, such as the Reverse button (the backwards ‘R’ icon). Even without them, though, isolating an individual drum sound from your loop and tweaking its character really is a super-simple process.

So, we have our isolated hit, but what next? For this example application at least, this is perhaps where the Sampler Track’s status as a ‘first iteration’ becomes more obvious. I’ll focus on what’s possible within Cubase itself (HALion owners may have more options) and, in particular, on the most common situation: extracting several different drum sounds (eg. kick, snare, hi-hat, tom and crash) from a loop to build a basic ‘kit’ that can then be triggered via MIDI. Let’s look at the two most obvious approaches, both of which work but are a little clunky.

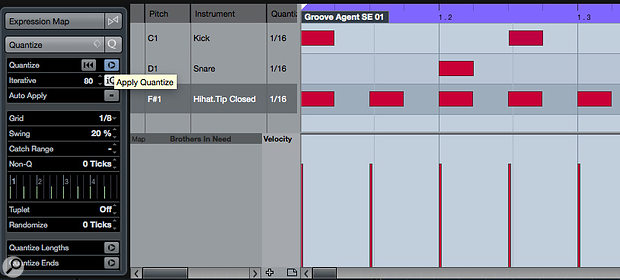

The Sampler Control Toolbar features a very interesting button called ‘Transfer to New Instrument’. Having defined your drum hit, click this to see options to export your selected sample (with all its settings) to a different virtual instrument plug-in. If you select Groove Agent (full version or SE) then, hey presto, a new instrument track is created for you, and your carefully crafted drum hit is inserted on an empty drum pad ready to play.

Great as this is for your first drum hit, if you go back to the Sampler Control section to define a second hit and repeat the Transfer to New Instrument process, the sample is placed in a second instance of Groove Agent. Currently, there’s no option to ‘add to existing instrument’; one for the future, perhaps. That’s a bit of a shame from a workflow perspective, but a simple workaround is to drag and drop this second sample from its pad in the second Groove Agent instance onto an empty pad in the first, and then delete the superfluous Groove Agent instrument track. Yes, this is a bit clunky, but it works and, importantly, it takes only a few seconds. Repeat as many times as required and you’ll soon have a Groove Agent ‘kit’ ready to play. While Groove Agent already includes similar sample-editing features for creating individual hits from within a drum loop, I find the Sampler Control environment a much more inviting place for this sort of quick-and-simple sample editing.

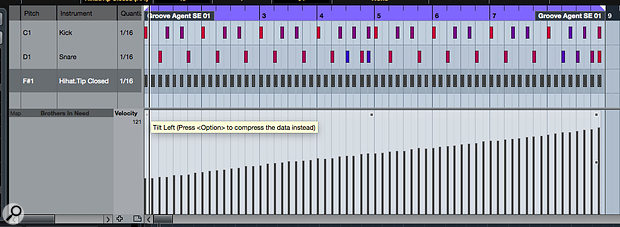

Another option is to create multiple Sampler Tracks (one for each drum hit/sound that’s required) based on different edits from the same original drum loop. This is easy to do but, again, a little clunky. First, note that you have to select all of the Sampler Tracks, and record-enable them, when you want to ‘play’ this kit from a single MIDI keyboard. Second, you’ll need to constrain the MIDI note mapping for each of your Sampler Tracks so that each sound only responds to a unique range of MIDI pitches. This is easy to do via the keyboard display in the Sampler Control section for each track, though, and it means you can define each drum sound to be triggered by two or three keys. (An upside is that using multiple keys per sound can make playing rolls or fast hi-hat patterns much easier!)
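The requirement that each Sampler Track responds only to its own range of MIDI pitches is easy to check on paper before you set it up. This sketch uses a hypothetical five-piece layout (two or three keys per sound, given as MIDI note numbers) and tests every pair of ranges for overlap; the note assignments and function names are illustrative only.

```python
from itertools import combinations

# Hypothetical layout: one Sampler Track per drum sound, each constrained to
# its own inclusive range of MIDI note numbers (two or three keys per sound).
KIT = {
    "kick": (36, 37),
    "snare": (38, 40),
    "hi-hat": (42, 44),
    "tom": (45, 47),
    "crash": (49, 50),
}

def find_overlaps(kit):
    """Return every pair of tracks whose note ranges share at least one key."""
    clashes = []
    for (a, (lo_a, hi_a)), (b, (lo_b, hi_b)) in combinations(kit.items(), 2):
        if lo_a <= hi_b and lo_b <= hi_a:
            clashes.append((a, b))
    return clashes

def tracks_for_note(kit, note):
    """List the record-enabled tracks that would respond to an incoming MIDI note."""
    return [name for name, (lo, hi) in kit.items() if lo <= note <= hi]

print(find_overlaps(KIT))        # []
print(tracks_for_note(KIT, 39))  # ['snare']
print(tracks_for_note(KIT, 41))  # []
```

Checking every pair, rather than only neighbours in sorted order, also catches a wide range that swallows several others.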

Depending on how you set up the pitch behaviour (for example, if you don’t engage the Fix option, which causes all keys to trigger the sample at the same pitch), the fairly subtle shifts in pitch between adjacent notes can also be used to add useful variation to your performance. You end up with multiple Sampler Tracks to manage, of course, and, when you record your MIDI performances, you’ll create multiple (identical) MIDI parts. But if that bothers you, just hide all these tracks within a Folder Track.
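Assuming the Sampler Track transposes by one equal-tempered semitone per key (full key tracking), a sample triggered n keys above its root note plays back at 2^(n/12) times its original rate, so an adjacent key is roughly 6% faster and a semitone higher. The Fix option removes that variation. The sketch below models both cases; the root note and the `fixed` flag are hypothetical stand-ins, not Cubase parameters.

```python
def playback_ratio(note: int, root: int, fixed: bool = False) -> float:
    """Playback-rate ratio when a sample with root key `root` is triggered at `note`.

    Assumes 100% key tracking in 12-tone equal temperament; `fixed=True`
    models the Fix option, where every key plays the sample unchanged.
    """
    if fixed:
        return 1.0
    return 2 ** ((note - root) / 12)

# Hypothetical snare mapped to notes 38-40 with its root on 38.
for note in (38, 39, 40):
    print(note, round(playback_ratio(note, root=38), 4))
# 38 1.0
# 39 1.0595
# 40 1.1225
```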

There are a couple more general Sampler Track ‘gotchas’ worth being aware of. First, if you drag a new sample to an existing Sampler Control zone, the default behaviour is simply to replace the existing sample, so any careful editing you’ve done will be lost, and there doesn’t appear to be an ‘are you sure?’ warning.

Second, note that any samples you drag and drop from outside the current project are not automatically added to your Project folder. If you reopen the project on the same host system, these samples will be recalled, but if you want to move the project to another system, you will need to take them with you. The easiest way to do this is to use the Media/Prepare Archive function, which gathers all the files referenced by the project and places them in the project folder.

So, while there’s clearly scope for further development, the Sampler Track is already a very promising new feature and Steinberg deserve a big pat on the back for it — it’s a hugely creative feature that’s easy to get your head around, and allows you to work fast.