Welcome to No Limit Sound Productions. Where there are no limits! Enjoy your visit!

| Company Founded | 2005 |
|---|---|
| Overview | Our services include Sound Engineering, Audio Post-Production, System Upgrades and Equipment Consulting. |
| Mission | Our mission is to provide excellent quality and service to our customers. We do customized service. |

Tuesday, March 31, 2015

Monday, March 30, 2015

Q How does mastering differ for vinyl and digital releases?

Sound Advice : Recording

Obviously a vinyl record is a different thing from a CD or a WAV file, but does it require a separate, dedicated master, or are the two formats basically made from the same mastered file?

Eric James

Jasper King, via email

SOS contributor Eric James replies: This is a timely question — mastering for vinyl has recently gone from being a specialised rarity to a common extra that clients ask for in addition to the digital master. And it usually is that way around: a digital main release (CD or download) with a vinyl version, perhaps for a shorter run, for sale at gigs, and sometimes as part of a marketing plan. The proportion of projects that are primarily for vinyl release, secondarily digital, is very much less. I mention this because the format of the primary offering can sometimes make a difference.

The short answer to the question, though, is yes, sort of: separate masters are required for CD replication or digital distribution and for vinyl records. However, in the majority of cases the mastering processing can be the same for both, as the crucial differences between them are practical (i.e. the level and extent of limiting, the word length, and the sequencing of the files). A digital master for CD has to have a 16-bit word length, and it can be as loud and as limited as the client’s taste or insecurity dictates; with the vinyl master there is a physical limit to what can be fed to the cutting head of the lathe, so heavily clipped masters are not welcome and can only be accommodated, if at all, by serious level reduction. For vinyl, the optimum source is 24-bit, dynamic, and limited either extremely lightly or not at all. The sequencing difference is that delivery from mastering for digital is either individual WAV files for download or a single DDPi file for CD replication, whereas for vinyl the delivery is generally two WAV files, one for each side of the record.
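To put the word-length difference into numbers, here is a minimal sketch using the standard textbook approximation for the theoretical dynamic range of ideal N-bit PCM with a full-scale sine wave (roughly 6.02 dB per bit plus 1.76 dB). The function name is illustrative only, and real converters and dithered masters will not reach these figures exactly.

```python
import math

def pcm_dynamic_range_db(bits: int) -> float:
    """Theoretical signal-to-quantisation-noise ratio of ideal N-bit PCM
    for a full-scale sine wave: 20*log10(2**N) + 10*log10(1.5)."""
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)

# Compare the CD word length with the 24-bit source preferred for vinyl.
for bits in (16, 24):
    print(f"{bits}-bit: about {pcm_dynamic_range_db(bits):.1f} dB")
```

The gap of roughly 48 dB is one way to see why a 24-bit file is the more comfortable source for a cut that still has analogue level decisions ahead of it.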

For the most part, the mastering process for vinyl and digital formats can be the same, and any guesswork around things like the stereo spread of bass frequencies is probably best left for the cutting engineer. Photo: JacoTen / Wikimedia Commons

This is how it generally works at my own, pretty typical, facility. We run the mastering processing through the analogue chain, gain-staging so that the final capture is a louder but, as yet, unlimited version of the master. To this we can subsequently add level and required limiting. In the simplest scenario, then, this as-yet unlimited version can serve as the vinyl master, and a different version, which has had gain added, becomes the digital master. This works best when the primary focus is the vinyl, as the louder digital version benefits from the preserved dynamics in the vinyl master.

Things can get more tricky if the primary focus is the digital master, and especially when that is required to be fairly loud. You can’t simply take an unlimited file and add 4 or 6 dB of limiting without sonic consequences, and so for loud CD masters, we normally add another step of gain-staging and include some light limiting during the initial processing run, the result being a louder master to begin with for the second stage of adding gain.
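A rough illustration of why 4 or 6 dB cannot simply be added to an unlimited file: the sketch below converts decibels to a linear gain factor and counts how many samples would exceed full scale afterwards. The hard clip is a deliberately crude stand-in for a real limiter, and the sample values and function names are hypothetical.

```python
def db_to_linear(db: float) -> float:
    """Convert a gain in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)

def apply_gain_with_ceiling(samples, gain_db, ceiling=1.0):
    """Apply gain, then hard-clip anything beyond the ceiling.
    Returns the processed samples and the number that had to be clipped."""
    gain = db_to_linear(gain_db)
    processed, clipped = [], 0
    for sample in samples:
        value = sample * gain
        if abs(value) > ceiling:
            value = ceiling if value > 0 else -ceiling
            clipped += 1
        processed.append(value)
    return processed, clipped

# Hypothetical peaks from a dynamic, unlimited master (full scale = 1.0).
peaks = [0.1, 0.5, -0.7, 0.9]
for gain_db in (0, 6):
    _, clipped = apply_gain_with_ceiling(peaks, gain_db)
    print(f"+{gain_db} dB: {clipped} of {len(peaks)} peaks over full scale")
```

Since 6 dB is very nearly a doubling of amplitude, every peak above half of full scale has to be dealt with by the limiter, which is where the sonic consequences come from.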

This is not always the way the issue is presented: there is sometimes talk of different EQ settings and the use of elliptical filters and whatnot. The fact is, though, that the EQ considerations offered as being necessary for vinyl are pretty much desiderata for most decent digital masters too. For example, extreme sibilance, often mentioned, certainly is a problem for vinyl, but then it’s hardly desirable for CD playback either. Another myth concerns ‘bass width’. I’ve been told by alleged label experts that (and here I quote) “vinyl masters need to be mono in low frequencies, and the low end, like 80-200 [Hz], almost mono.” If this were true, it would make you wonder how classic orchestral recordings (which have the double basses well off-centre to the far right) ever managed to get cut to vinyl! The fact is that wildly out-of-phase and excessive bass can be problematic, and if it’s present in a mix, a certain amount of taming will be needed in the mastering stage. But even then, if the master is going to a reputable cutter it is best to leave that decision to them.

Saturday, March 28, 2015

Friday, March 27, 2015

Q Can I feed my monitors from my audio interface’s headphone output?

Sound Advice : Recording

I want to add another set of monitors for A/B referencing, but my interface has only one set of monitor outputs. I don’t want to degrade the signal in any way. Is it possible to simply use a splitter adaptor to separate the stereo channels from the headphone output into a left and right output to the monitors (without any buzz or hum)? I don’t really need the switching facility as I’d use software for that.

Hugh Robjohns

Via SOS forum

Your interface’s headphone output can be used to feed audio to your monitor speakers, but it’s unlikely to be the best option.

SOS Technical Editor Hugh Robjohns replies: You can do that but it is an unbalanced signal, so the likelihood of ground-loop hums and buzzes is inevitably quite high. Headphone outputs also tend to be noisier and often suffer higher distortion than dedicated line outputs. (At the end of the day, it’s a small power-amp stage rather than a proper line driver.)
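As a simplified picture of why the unbalanced connection is more exposed, the sketch below models interference that appears equally on both conductors of a balanced line and is cancelled when the receiver takes the difference. It is an idealised model with perfect matching; the 50 Hz hum level, test tone and helper names are assumptions chosen purely for illustration.

```python
import math

SAMPLE_RATE = 48_000

def sine(freq_hz, amplitude, count):
    """Generate `count` samples of a sine wave."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
            for i in range(count)]

def peak_error(received, wanted):
    """Largest absolute difference between received and wanted samples."""
    return max(abs(r - w) for r, w in zip(received, wanted))

count = 4800                       # 0.1 seconds of audio
signal = sine(1000.0, 0.5, count)  # wanted programme audio
hum = sine(50.0, 0.1, count)       # hypothetical mains-related interference

# Unbalanced: interference simply adds to the signal.
unbalanced = [s + h for s, h in zip(signal, hum)]

# Balanced: the signal travels in opposite polarity on two conductors,
# both pick up the same interference, and the receiver subtracts them.
hot = [s + h for s, h in zip(signal, hum)]
cold = [-s + h for s, h in zip(signal, hum)]
balanced = [(a - b) / 2 for a, b in zip(hot, cold)]

print("unbalanced peak error:", peak_error(unbalanced, signal))
print("balanced peak error:  ", peak_error(balanced, signal))
```

Real balanced inputs reject common-mode interference by a finite amount rather than perfectly, but the principle is the same.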

There are plenty of ready-made balanced line-level switch boxes on the market, though (try Coleman, ART or Radial, for example). However, most such products seem surprisingly expensive for such a simple device — so it might be worth considering investing in an affordable passive monitor controller, which can cost very little more. I know you said that you’d manage the selection via your software, but in the event of an error on your system resulting in full-scale digital noise being sent to the monitors, it’s handy to have a hardware volume control or mute button to hand!

Thursday, March 26, 2015

Wednesday, March 25, 2015

Tuesday, March 24, 2015

Q What’s the best way to hook up my hardware to my DAW?

Sound Advice : Recording

I’m hoping you can help me use my hardware with my computer! I’ve acquired a few instruments (synths, drum machines and so on) and some outboard gear too (some old Aphex, Dbx and Drawmer compressors, a TL Audio EQ, and some Alesis and Yamaha reverbs, and I’ve also got a load of guitar effects pedals). My current setup is based around a Samsung laptop running a copy of Reaper, and a Focusrite Saffire Pro 40 interface. Ideally, I’d like to be able to use all of these bits of gear whenever I want, without having to keep plugging in and unplugging all the cables. What’s the best way to do this?

Matt Houghton

Jeff Foyle, via email

Reviews Editor Matt Houghton replies: I explained a lot of this more generally in an article about ‘hybrid studios’ back in SOS May 2010 (http://sosm.ag/hybrid-systems), and it’s well worth reading that if you want some general pointers. But let’s look at your setup more specifically — and the first thing to say is that it sounds like you’ve got more gear than your interface can cope with simultaneously. This means that you’re either going to need to invest in some gear that gives you more inputs and outputs, or you need to put up with plugging and unplugging things.

The Saffire Pro 40 offers eight analogue inputs and 10 analogue outputs (I’ll use I/O in place of ‘inputs and outputs’ from here on). You’re going to need two of those outputs to cater for your stereo bus (i.e. your main monitor mix). That leaves you with eight analogue I/O, which is enough to hook up eight mono outboard processors (each of which takes up an input and an output), four stereo processors (each of which takes up two inputs and two outputs), or some combination of stereo and mono units. If you also need to hook up mics and synths, each of which takes up an input, that doesn’t leave you with much room for outboard.
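The arithmetic above can be sketched as a small helper. The input and output counts are the ones quoted in the text; the function names and the four-input recording scenario are hypothetical.

```python
def outboard_pairs(inputs: int, outputs: int,
                   monitor_outputs: int = 2, recording_inputs: int = 0) -> int:
    """Input/output pairs left for outboard once monitoring and
    recording sources have taken their share."""
    free_inputs = inputs - recording_inputs
    free_outputs = outputs - monitor_outputs
    return max(0, min(free_inputs, free_outputs))

def capacity(pairs: int) -> dict:
    """A mono processor uses one pair; a stereo processor uses two."""
    return {"mono": pairs, "stereo": pairs // 2}

# Figures from the text: eight analogue inputs, 10 analogue outputs.
print(capacity(outboard_pairs(8, 10)))
# Hypothetical session with four inputs tied up by mics and synths.
print(capacity(outboard_pairs(8, 10, recording_inputs=4)))
```

Each input claimed by a mic or synth removes one mono processor slot, which is why the outboard budget shrinks so quickly.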

There are a few different ways to tackle this. If ease of routing is your main concern but you don’t need to use all these synths, processors and effects at once, then the traditional approach is to use a patchbay: you hook up your interface’s I/O and all your gear’s I/O to the patchbay, and use patch cables to route one item to another. Obviously you’re still plugging and unplugging, but it’s a lot easier than having to plug and unplug cables from the back of all your gear, particularly if it’s rackmounted and awkward to get behind the rack! Hugh Robjohns wrote a useful piece detailing ways to organise your patch bay in SOS December 1999 (http://sosm.ag/patchbays).

The humble patchbay has been taking the hassle out of hardware routing for decades, and remains the most effective way of connecting an abundance of hardware to the limited I/O of your audio interface. Photo: Hannes Beiger

If you need to be able to access all of your gear from your DAW at the same time, though, you’ll either need a new interface or to expand your Pro 40’s analogue I/O count via its ADAT (and possibly S/PDIF) digital I/O, courtesy of some external A-D/D-A converter boxes. It’s possible in this way to add another 10 analogue I/O in total, but note that you’ll be limited to operating at 44.1 or 48 kHz if you do so; that’s a limitation of using eight ADAT channels over one optical connection. For example, you might add a Behringer ADA8200 via ADAT to give you another eight I/O, and you might have a reverb box that can be hooked directly up to the interface’s S/PDIF I/O (I used to hook up a TC Electronic M300 in this way; that and its replacement, the M350, are excellent little boxes for the money!).
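The sample-rate trade-off follows from how ADAT optical carries audio: a single link has eight slots at 44.1 or 48 kHz, and the common S/MUX scheme doubles up slots to carry fewer channels at higher rates. The sketch below reflects that general behaviour; whether a particular interface or expander supports S/MUX is device-specific and is not covered here, so treat the higher-rate rows as an assumption.

```python
def adat_channels(sample_rate_hz: int) -> int:
    """Audio channels carried by one ADAT optical link at a given sample rate."""
    if sample_rate_hz <= 48_000:
        return 8
    if sample_rate_hz <= 96_000:
        return 4  # S/MUX: two ADAT slots per audio channel
    if sample_rate_hz <= 192_000:
        return 2  # S/MUX4: four ADAT slots per audio channel
    raise ValueError("unsupported sample rate")

SPDIF_CHANNELS = 2  # one stereo pair

for rate in (44_100, 48_000, 96_000):
    extra = adat_channels(rate) + SPDIF_CHANNELS
    print(f"{rate} Hz: up to {extra} extra channels via ADAT plus S/PDIF")
```

At 44.1 or 48 kHz this gives the 10 additional channels mentioned above: eight over ADAT and two over S/PDIF.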

You also have the option of investing in a new interface altogether, and there are plenty that offer more analogue I/O or are more expandable. It’s just a question of budget, really. If you do so, note that your Saffire Pro 40 can be operated in ‘stand-alone mode’ (details can be found on the Focusrite web site). This means that you could purchase an interface with dual ADAT ports (such as the Liquid Saffire 56), use your current interface as one ADAT expander to give you eight extra channels, and buy a second expander, like the Behringer unit mentioned above, for another eight. That would give you 24 analogue inputs and 26 analogue outputs, plus the ability to add two more of each via S/PDIF. An alternative would be to buy an interface that offers lots of I/O in one box.

It struck me, though, that you’re less likely in some scenarios to need to access all of your synths simultaneously than you are your processors, simply because you can record their outputs as audio. So you might find that hooking up a small mixer to your interface to manage your recording inputs frees up enough I/O to accommodate most of your processors without having to expand things to the extent described above.

The other problem you raise is that you wish to hook up guitar effects to your interface, and this raises the issues of signal level and impedance. Most guitar effects pedals are designed to work with guitar-level signals, whereas your interface is designed to operate for the most part with mic and line-level signals. There are two high-impedance instrument inputs, which are suitable for DI’ing your guitar, but no outputs suitable for re-amping. This may or may not be a problem; it depends what you’re trying to do. The ‘full fat’ solution is to use a re-amp box, which takes the line-level output from your interface and converts it to the level and impedance that your amp and effects expect to see. Yet, while that’s pretty much a necessity for routing things to a guitar amp, it’s often less so for pedals, simply because you’re more than likely wanting to use them to ‘dirty things up’ a bit, rather than to perform exactly as they would when using them with a guitar. If that’s the case, as long as you can supply them with a signal at a suitable level and they sound how you’d like them to sound, then you’re good to go. I’ve used one side of an interface’s headphone output for this in the past, simply because it has a handy volume control and sends an unbalanced signal out to the unbalanced effects pedal. Coming up with a system whereby you don’t have to keep re-patching your guitar effects is going to be tricky, though, and if it’s a permanent studio setup, then it might be worthwhile setting up a dedicated unbalanced patchbay for them. But if you’re wanting to take them out for live use, I’d prefer to patch them in as and when needed, as it’s not so inconvenient as reaching around the back of the rack.

Monday, March 23, 2015

Saturday, March 21, 2015

Q Can you recommend a realistic vocal synth or sample library?

John Walden

I’ve been trying to find a keyboard, sample library, plug-in or synth that provides superb vocalisations for ‘oohs’ and ‘aahs’ in particular, but that might supply other vocalisations that are truly useful in recording. I can’t find or afford to hire outstanding background vocalists, yet the ‘ooh’ and ‘aah’ patches on my many synths simply don’t sound real. Is there anything you can recommend?

Roger Cloud, via email

Quantum Leap Symphonic Choirs may not be the newest of vocal sample libraries, but it remains one of the best.

SOS contributor John Walden replies: If any instrument can reveal the artificial nature of synthesis or sampling, then it’s the human voice, but thankfully there are some very good vocal sample libraries. The downside is that the really good ones (those that will get you close enough to the real thing as to be virtually indistinguishable) don’t come cheap. EastWest’s Quantum Leap Symphonic Choirs remains impressive, and the even older Spectrasonics Vocal Planet and Symphony Of Voices libraries also sound great. If you want ‘oohs’ and ‘aahs’, all the above offer plenty of choice.

An alternative approach might be to see if you can pick up a Vocaloid library (we’ve reviewed a few of these over the years). This software uses a different approach, based on voice synthesis rather than samples. The concept was ambitious and the technology perhaps never quite lived up to the hype, but a little practice should have you generating decent background vocals.

Of course, the other option is to use a mic and record some DIY ‘oohs’ and ‘aahs’ of your own, and then beef them up using one of the many software processes that can turn a single voice into a small ensemble of voices. iZotope’s Nectar vocal production suite and Zplane’s Vielklang are pretty good tools for this.

Friday, March 20, 2015

Thursday, March 19, 2015

Q Can I make a 'Subkick' mic from any speaker cone?

Hugh Robjohns

Yamaha no longer make their Subkick drum mic, so I was thinking of making my own from an old loudspeaker. Does it have to be an NS10 driver? I also wondered whether a bigger woofer would allow me to capture more sub-bass?

Rob Glass, via email

SOS Technical Editor Hugh Robjohns replies: The short answers are no, and not in the way you think it should! Most people think the Subkick works like any other kick-drum microphone, but that it somehow captures mystical ‘sub’ frequencies from the kick-drum that other mics just don’t hear. After all, it’s so big and all those other kick-drum mics are puny little things, so they can’t possibly capture low frequencies which we know are big because they have huge wavelengths.

However, microphone diaphragm size is completely irrelevant when it comes to a microphone’s LF response (it can affect the HF response though). The reason is that a microphone works as a pressure (or pressure-gradient) detector to sense the changing air pressure at a point in space. It doesn’t matter how slowly the air pressure changes, or how big or small the pressure change is (within reason). Small-diaphragm omnidirectional (pressure-operated) microphones can be built very easily with a completely flat response down to single figures of Hz, if required, and most omnis are flat to below 20Hz. Directional (pressure-gradient) mics aren’t quite so easy to engineer for extreme LF performance (because of the mechanics involved in a pressure-gradient capsule), but a response flat to below 40Hz is not unusual. The fundamental of a kick drum is generally in the 60 to 90 Hz region, so well within the capability of any conventional mic.
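For a sense of scale, the wavelengths involved can be worked out from wavelength = speed of sound / frequency. The sketch assumes a speed of sound of 343 m/s (dry air at around 20 °C); the function name is illustrative.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at roughly 20 degrees C

def wavelength_m(freq_hz: float) -> float:
    """Acoustic wavelength in metres for a given frequency."""
    return SPEED_OF_SOUND_M_S / freq_hz

# The typical kick-drum fundamental range quoted in the text.
for freq in (60, 90):
    print(f"{freq} Hz: about {wavelength_m(freq):.2f} m")
```

At several metres long, these waves dwarf a speaker cone just as thoroughly as they dwarf a small capsule, which fits the point that a microphone only needs to sense pressure changes at a point rather than 'fit' the wave.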

It can certainly be useful, but does Yamaha’s classic Subkick have more in common with a synth than a conventional microphone?

The confusion and misplaced assumption about needing a large diaphragm probably comes from the fact that a large diaphragm (cone) is required to move sufficient air to generate useful acoustic power at low frequencies from a loudspeaker. But the physics and mechanics involved are entirely different and simply don’t translate to the operating principles of a microphone.

Returning to the Subkick idea, basically what is on offer is a relatively high-mass diaphragm (cone) with a huge copper coil glued on the back, adding more mass and inertia. The whole assembly is horrendously under-damped and almost completely uncontrolled. Not surprisingly, when employed as a microphone it has what under normal circumstances would be an appalling frequency response, completely lacking in high-end. What is important, though, is the very poor damping, because this means that the diaphragm (cone) will tend to vibrate at its own natural resonant frequency when stimulated by a passing gust of wind — such as you get from a kick drum. So really, the Subkick isn’t capturing the kick drum’s mystical subsonic LF at all — it’s basically generating its own sound. In other words, what we actually have is an air-actuated sound synthesizer, not an accurate microphone!

Sure, the initial kick-drum transient starts the diaphragm in motion and the coil will generate an output voltage that approximates to the acoustic pressure wave (but with a very poor transient response). But after that initial impulse of air has passed, the diaphragm will try to return to its rest position, oscillating back and forth until the energy has been dissipated in the cone’s suspension. It is this ‘decaying wobble’ that generates the extended low-frequency output signal that most assume to be the mystical lost sub-harmonics of the kick drum. In reality, the output signal of the Subkick is not related to the kick drum’s harmonic structure in any meaningful way at all — it’s overwhelmingly dominated by the natural free-air resonance characteristics of the loudspeaker driver.
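The 'decaying wobble' can be sketched as the impulse response of an under-damped second-order resonator. This is only an idealised model, not a measurement of any real driver: the 70 Hz resonance and the damping ratio of 0.1 used in the example are hypothetical values chosen for illustration.

```python
import math

def ring_frequency_hz(f0_hz, zeta):
    """Frequency at which an under-damped resonator actually rings."""
    if not 0 <= zeta < 1:
        raise ValueError("zeta must be in [0, 1) for an under-damped system")
    return f0_hz * math.sqrt(1.0 - zeta ** 2)

def impulse_response(f0_hz, zeta, t_s):
    """Normalised cone displacement t_s seconds after a short impulse."""
    w0 = 2.0 * math.pi * f0_hz
    wd = 2.0 * math.pi * ring_frequency_hz(f0_hz, zeta)
    return math.exp(-zeta * w0 * t_s) * math.sin(wd * t_s)

def decay_time_s(f0_hz, zeta, drop_db=60.0):
    """Time for the ringing envelope exp(-zeta*w0*t) to fall by drop_db."""
    if zeta <= 0:
        raise ValueError("zeta must be positive for the ringing to decay")
    w0 = 2.0 * math.pi * f0_hz
    return (drop_db / 20.0) * math.log(10.0) / (zeta * w0)

# Hypothetical driver: 70 Hz free-air resonance, damping ratio 0.1
print(ring_frequency_hz(70.0, 0.1))  # just under 70 Hz
print(decay_time_s(70.0, 0.1))       # roughly 0.16 s to fall by 60 dB
```

Note that the ringing frequency depends only on the resonator's own parameters, not on the spectrum of whatever set it in motion, which is the sense in which the output is 'generated' rather than captured.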

Now it just so happens that the size and natural free-air resonance of the NS10 driver is about right for resonating with the fundamental of a typical kick drum, thus delivering a nice low-end boom that complements the sound of some kick drums rather well.

I suspect that most people use Subkicks either because they’ve read about or seen someone they admire do it, or because the kick drum they want to record isn’t delivering the sound they need, want or expect. The latter is usually because it’s an inappropriate type of kick drum, it hasn’t been tuned or damped properly, it isn’t being played very well, or a standard mic hasn’t been placed very well. Having said that, if a Subkick approach creates a useful sound component that helps with the mix, that’s fine; just don’t run away with the idea that it’s capturing something real that other mics have missed!

Returning to the question of which speakers to use, the answer is whatever delivers the kind of sound you’re looking for! As I said, the NS10 driver just happens to have a free-air resonance at the right sort of frequency (and low damping) to resonate nicely in front of a kick drum. Early adopters placed the driver directly in front of the kick drum, open to the air on both sides, but Yamaha’s commercial version actually placed the driver inside what was effectively a snare-drum shell. Just like a speaker cabinet, the enclosure alters the natural resonance frequency and the damping characteristics of the driver, changing its performance (and tonality) substantially.

So, if you want to make your own Subkick, you need to look for a bass driver with a suitable free-air resonance characteristic and damping to generate an appropriate output signal. If you plan to build it into a cabinet, the same kinds of speaker-design calculations will apply to deriving the appropriate size of enclosure and tuning. In practice, trial and error works just as well and can be good fun, especially if you can pick up some cheap old hi-fi speakers with blown tweeters from a car boot sale!

To wire the thing up, you simply need to connect the hot and cold wires of a balanced mic cable to the hot and cold terminals of the speaker, and the cable screen can optionally be connected to the metal chassis. Assuming neither voice-coil terminal is shorted to the metal chassis, it should be fine with phantom power. The output from most speakers used in this way is usually pretty hot, though, so, at the very least, set the preamp for minimum gain, and expect to use the pad. In many cases you’ll need an external 20dB in-line XLR attenuator.
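As a quick check on what a 20dB attenuator does to the signal, the sketch below converts a pad value in decibels into a voltage ratio. The 1 V peak figure in the example is a hypothetical level, not a measured speaker output.

```python
def pad_voltage_ratio(pad_db):
    """Voltage ratio (output / input) for an attenuator of pad_db decibels."""
    return 10.0 ** (-pad_db / 20.0)

def attenuated_voltage(input_v, pad_db):
    """Voltage remaining after passing input_v through the pad."""
    return input_v * pad_voltage_ratio(pad_db)

# Hypothetical example: 1 V peak from the speaker through a 20dB pad
print(attenuated_voltage(1.0, 20.0))  # about 0.1 V, a tenth of the input
```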

Wednesday, March 18, 2015

Q Do laptops sound worse when using an AC adapter?

Hugh Robjohns

A mastering engineer told me that, when playing back source files from his laptop into a high-quality USB-connected D-A, the masters sounded ‘worse’ if the laptop was plugged into its power supply, as opposed to running off the battery. He sent me ‘with and without’ comparisons and there was indeed quite a difference. He then went on to test the source files running from various USB, Firewire and internal hard drives, and maintains that internal drives for record or playback in real time (ie. not a data transfer) sound better than either USB or Firewire. What could be going on here?

SOS forum post

SOS Technical Editor Hugh Robjohns replies: I’ve heard many similar claims — in particular that USB thumb drives sound better than external hard-disk drives — and have experienced the same thing myself on occasion. I suspect the answer lies in our old nemesis, the humble ground loop.

Ground loops are formed when multiple devices are interconnected in such a way that their local ground references are at slightly different voltages, and a current can therefore flow around a loop from one device’s mains safety ground, through the connecting cables to a second device, through that device’s mains safety ground, and back via the building’s mains wiring. This ground current is largely inevitable and quite normal, and it really shouldn’t be a problem if the equipment is designed and grounded correctly. However, in some cases this ground current can find its way into the ground reference point of the electronics, and thus affect the equipment’s operation in some subtle, or sometimes not so subtle, way.

Running your laptop from its AC power supply can sometimes complete a ground loop, and in some cases that can be very bad news for the performance of a USB D-A converter.

In the case of analogue equipment, if this ground current finds its way into an audio amplifier’s ground reference, it effectively gets mixed in directly with the wanted audio signal. Since the ground current is from the mains supply, it usually comprises a 50Hz (here in the UK; in some countries, including the USA, it’s 60Hz) fundamental with some harmonics, and we hear that as the familiar ‘ground-loop hum’.

In digital equipment, ground-loop currents might manifest as audible hums or buzzes, but more often than not they produce far more subtle effects and artifacts instead, because they end up interfering with the digital data in various ways, rather than the analogue signal. Consequently, ground-loop problems tend to alter the performance of D-A (and A-D) converters quite subtly — perhaps by modulating their ground references, or by inducing jitter into the clocking circuitry. Ground loops are very unlikely to corrupt pure data transfers between a computer and a hard drive, say, but may well affect any real-time conversion processes between the analogue and digital domains.

In the example you gave, it’s quite possible for a ground loop to be created between a mains-powered converter (and connected mains-powered amps/speakers) and a mains-powered laptop via the grounded RF shield within a USB or Firewire connecting cable. (The same applies to any external mains-powered hard drives too, of course). Disconnecting the mains adapter from the computer and running it on its internal battery obviously breaks that ground loop and removes whatever influence ground-loop currents were having.

As with analogue equipment, good design of internal grounding arrangements will minimise or negate these kinds of ground-loop issues and, again, as with analogue equipment, not every manufacturer has mastered this critical aspect of design. So some equipment might exhibit subtle problems, and some might not. With any ground-loop problems, the first step is to minimise the chances of a ground loop generating troublesome current flows by making sure that all connected equipment is plugged into adjacent mains power sockets (so that the mains safety earths are all at the same potential), not different sockets on different sides of the room!

If some of the equipment can’t be battery powered, the next step is to break the ground path between devices. Unfortunately, the simple and elegant solution for analogue ground loops — the line isolation transformer — isn’t so readily available for high-speed USB and Firewire connections.

USB isolators certainly exist, using either special ‘air-core’ pulse transformers or optocouplers. Sadly, though, most only support USB 1 full-speed operation at 12Mbps, not full high-speed USB 2 up to 480Mbps. Consequently, they might be fine for curing ground-loop problems with USB keyboards passing MIDI data, but probably aren’t adequate for multichannel audio interfaces.
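To see why 12Mbps is marginal for multichannel audio, here is a rough sketch comparing raw PCM payload rates with the full-speed figure quoted above. It counts audio payload in one direction only and ignores USB protocol overhead, so real-world headroom would be smaller still; the channel counts and formats are illustrative assumptions.

```python
FULL_SPEED_MBPS = 12.0  # USB full-speed signalling rate quoted in the text

def pcm_bitrate_mbps(channels, sample_rate_hz, bits_per_sample):
    """Raw PCM payload rate in megabits per second (no protocol overhead)."""
    return channels * sample_rate_hz * bits_per_sample / 1e6

def fits_full_speed(channels, sample_rate_hz, bits_per_sample):
    """True if the raw payload alone is below the full-speed signalling rate."""
    return pcm_bitrate_mbps(channels, sample_rate_hz, bits_per_sample) < FULL_SPEED_MBPS

# Illustrative cases: stereo 24-bit/44.1kHz versus 16 channels of 24-bit/96kHz
print(pcm_bitrate_mbps(2, 44100, 24), fits_full_speed(2, 44100, 24))
print(pcm_bitrate_mbps(16, 96000, 24), fits_full_speed(16, 96000, 24))
```

The stereo stream needs only a couple of megabits per second, whereas the 16-channel case is around three times the full-speed rate before any overhead is counted.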

B&B Electronics make a commercial full-speed (12Mbps) USB isolator, and Analog Devices offers the ADUM3160 and ADUM4160 isolator chips, which have been used by Oleg Mazurov in a DIY isolator circuit. This also appears to be offered, ready built, by Olimex Ltd. Again, these are limited to a maximum data rate of 12Mbps.

Tuesday, March 17, 2015

Monday, March 16, 2015

Q How do I achieve a reverb-drenched sound on a full mix?

Mike Senior

A lot of the music I’m enjoying seems to be going back to an old-school sound of being swamped in lush reverb. Examples include the recent Ray Lamontagne, Escondido, Damien Jurado and Jesse Woods recordings. For this effect, is it best to put the reverb on the master bus or to leave it to the mastering engineer? Do we assume they have excellent reverbs at hand? And if that’s the effect we’re going for, is it better to leave all of the tracks themselves completely dry? Would a high-pass filter on the reverb still be advised?

Matt, via email

SOS contributor Mike Senior replies: First things first, reverb is almost always best applied during the mixing process, rather than at the mastering stage, because that way you can selectively target the effect to give your mix a sense of depth. Some parts might have more reverb to push them into the background, for instance, while others might be left drier to keep them subjectively closer to the listener. So, for example, in the first verse of Ray Lamontagne’s ‘Be Here Now’ there’s a lot less reverb on the lead vocal and acoustic guitar than on the piano and strings, hence the latter appear considerably further away from you.

If your songs trade on lush reverb, should you be putting a reverb on the master bus? Probably not...

As to whether the reverbs those productions are using are ‘excellent’, that’s a question of definition as much as anything: there’s no absolute quality benchmark when it comes to reverb processing for popular-music mixing, because different reverb types are often used for very different purposes. For example, there are many classic reverb units that are a bit rubbish at simulating convincing acoustic spaces, but which are popular for other reasons; perhaps they flatter the timbre of a certain instrument or enhance its sustain. However, I suspect from your stated references that you’ll get most joy from realistic acoustic emulations and smooth plate-style patches, both of which can be found very affordably these days, and may even be bundled within your software DAW system.

The difficulty with lush reverbs, however, is avoiding mix clutter, and high-pass filtering the reverb returns, as you suggest, is therefore usually advised to tackle this. More in-depth EQ may also be necessary, and the best way to tell if it’s needed is by toggling the reverb return’s mute button while you listen to the overall mix tonality. If the mix suddenly gets a bit ‘honky’ when you switch in the reverb, then that might be an indication that you need a bit of mid-range cut, say. Another trick that I find extremely handy in this kind of context is to precede the reverb with a tempo-sync’ed feedback delay, because this helps lengthen the sustain tail of the reverb without completely washing the mix out, and also keeps instruments from being pulled as far back in the depth perspective by the effect. I’d also recommend not feeding too many different instruments to the same reverb when you’re using long-decay patches, because that makes it easier to filter out elements of a specific instrument’s reverb that you don’t need, without affecting other parts, which can further improve the clarity of your overall mix texture.
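For the tempo-sync’ed delay, the delay time follows directly from the song tempo. The sketch below is a minimal helper, assuming the tempo is counted in quarter-note beats; the function name and the 120bpm example are illustrative only.

```python
def delay_ms(bpm, beats=1.0):
    """Delay time in milliseconds for a note lasting `beats` quarter-note beats."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    return 60000.0 / bpm * beats

# At 120bpm: quarter note, eighth note and dotted eighth
print(delay_ms(120))        # 500.0
print(delay_ms(120, 0.5))   # 250.0
print(delay_ms(120, 0.75))  # 375.0
```

Most DAW delays will do this calculation for you when set to sync mode, but the numbers are handy for hardware units or plug-ins that only accept milliseconds.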

Saturday, March 14, 2015

Friday, March 13, 2015

Q Will a Shure SM58 sound better on vocals than a condenser mic in a lively room?

Sound Advice : Miking

I have to record a vocal track in a fairly live-sounding room. I’ve got a couple of reasonable condensers, which I could use with a Reflexion Filter, but I’m considering using a Shure SM58 [cardioid dynamic mic], purely as it might pick up less room ambience. Is the drier sound I’ll get with the SM58 worth the slight loss of detail? And, for that matter, would the SM58 actually sound drier in the first place?

Hugh Robjohns

SOS Forum Post

Can a dynamic mic like the SM58 really help tame room sounds when recording vocals? The answer may be yes, but probably not for the reason you’d think!

SOS Technical Editor Hugh Robjohns replies: Plenty of big names record with an SM58 to get the right performance, so I wouldn’t worry too much about any potential for ‘loss of detail’. From that point of view, just try it out and see how happy you are with the sound you get. On the matter of avoiding the capture of unwanted room sound, working any mic very close will minimise room sound, simply because you’re increasing the ratio of wanted signal (voice) to unwanted signal (room). And as it’s easier to use an SM58 closer than most capacitor mics, it does seem to make sense. Of course, getting up close and personal with a cardioid mic like this will bring the proximity effect bass boost into play, so I’d advise doing a quick test recording. Like its bigger brother the SM7, the SM58 is actually designed to be flatter at the low end when used up close, with the bass response falling off the further away you get. An alternative would be to try an Electro-Voice RE20, another dynamic mic that can be used up close, but one which has been acoustically designed to control the proximity effect in that kind of application. All this said, though, personally, I’d much rather tame the room with some well-placed duvets behind and to the sides of the vocalist (which will have a much more beneficial effect than using the Reflexion Filter on its own) and use a capacitor mic, provided it is the best choice for the voice and application in question.
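The benefit of working close can be estimated with the inverse-square law. The sketch below assumes the singer behaves roughly like a point source and that the diffuse room sound at the mic stays about the same as the mic moves, which is only an approximation; the distances are illustrative.

```python
import math

def direct_gain_db(old_distance_m, new_distance_m):
    """Change in direct-sound level (dB) when moving the mic between distances.

    Assumes inverse-square behaviour, i.e. an idealised point source.
    """
    if old_distance_m <= 0 or new_distance_m <= 0:
        raise ValueError("distances must be positive")
    return 20.0 * math.log10(old_distance_m / new_distance_m)

# Halving the distance gives about 6dB more voice relative to the room
print(direct_gain_db(0.30, 0.15))
# Moving from 30cm to 5cm gives roughly 15.6dB
print(direct_gain_db(0.30, 0.05))
```

Under these assumptions, every halving of the working distance improves the voice-to-room ratio by about 6dB, whichever type of mic is used.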

Thursday, March 12, 2015

Saturday, March 7, 2015

Q What makes a vocal part's harmonics louder than the fundamental pitch?

Sound Advice : Mixing

I’m recording a singer whose pitch is pretty good, but on higher notes the sound gets a little harsh. Looking at the waveform on a spectrum analyser, I see that the harmonics (first octave up, etc.) have as much amplitude as the main note. I am EQ’ing a bit to make it sound less harsh, successfully to some degree, but I was just wondering whether it’s unusual for harmonics in the sung word to be as loud or even louder than the actual note?

Mike Senior

Tim Jacobs, via email

A dynamic equaliser such as Melda’s MDynamicEQ is one of the best tools for dealing with the harsh–sounding single–frequency resonances generated by some singers.

SOS Contributor Mike Senior replies: Not at all. The main reason is that vowel sounds are generated by creating quite powerful resonances in the throat, nose and mouth, and a harmonic can easily overpower a pitched note’s fundamental under such circumstances. In my experience, this also tends to happen more often when the singer is straining for higher pitches or simply just singing very loud, as both these situations can favour harmonics over fundamental in the timbral structure of the note. The situation isn’t helped by the practice of close–miking, which will tend to unnaturally highlight mouth resonances that wouldn’t be nearly as obtrusive when mixed more evenly with resonances from chest and sinuses in normal listening situations. Operatic singers can be particularly problematic too, because of the way they deliberately exaggerate their vocal resonances for the purposes of projection in order to compete on even terms with backing instruments in typical performance situations.

From a mixing perspective, this can be a tremendously fiddly thing to try to remedy with processing, because effectively you’re chasing a moving target. On some notes, the unwanted resonance is there, but on others it isn’t, depending on the interaction between the pitch, volume, and vowel–sound being used. That means you can’t use simple EQ to scotch the resonance without it wrecking non–resonant notes. I usually do one of two things: either I automate narrow EQ cuts to rein in those over–strong harmonics just on the harsh–sounding notes, or I program a dynamic EQ plug–in to do the same thing. (I rather like Melda’s MDynamicEQ for this.) Fortunately, there are usually only two or three troublesome harmonics in any one vocal recording, and they’re usually all in the 1kHz–4kHz range, which limits the laboriousness of the endeavour to a degree.
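To narrow down where a narrow EQ cut or dynamic EQ band might be needed, it can help to list which harmonics of a sung note land in the 1kHz–4kHz range mentioned above. The following is only a minimal sketch: the function name, the default band edges and the example pitch (A4 at 440Hz, standard concert pitch) are illustrative assumptions, and which harmonic actually sounds harsh still has to be judged by ear.

```python
def harmonics_in_band(fundamental_hz, low_hz=1000.0, high_hz=4000.0):
    """Return (harmonic number, frequency in Hz) pairs inside the band.

    Harmonic 1 is the fundamental itself, harmonic 2 is an octave above it,
    and so on.
    """
    if fundamental_hz <= 0:
        raise ValueError("fundamental must be positive")
    found = []
    number = 1
    while number * fundamental_hz <= high_hz:
        frequency = number * fundamental_hz
        if frequency >= low_hz:
            found.append((number, frequency))
        number += 1
    return found

# Example: candidate frequencies for a sung A4 (440 Hz).
for number, frequency in harmonics_in_band(440.0):
    print(f"harmonic {number}: {frequency:.0f} Hz")
```

Because the harmonics move with the sung pitch, the list changes from note to note, which is one way of seeing why a static EQ cut cannot follow the problem.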

While we’re on the subject, this issue is by no means restricted to vocals. Many instruments simply aren’t large enough to project much level at the fundamental frequencies of their lowest notes, so they’ll naturally give you a harmonics–heavy balance, and that’s not usually a mixing problem — although you can also get sporadically overbearing harmonics with instruments too, especially things like drums, acoustic guitars, and wah–wah electric guitars.

Thursday, March 5, 2015

Q What made me hear a phantom low note when singing in a choir?

Sound Advice : Recording

I recently attended a choir workshop in a large, square, empty church. The space had incredible acoustics and we measured around six seconds of reverb, even with 40 people in the room. At one point, the tutor had us all stand in a circle and sing particular notes of a scale increasing in pitch at the following intervals: 1, 5, 1, 3, 5, flat 7, 1 and 2, starting an octave below middle C. We all just held an ‘ah’ sound at our given note and, very quickly, a tone two octaves below middle C was clearly audible, appearing to come from different parts of the room — it sounded like there was an invisible nine–foot man singing in the middle of us! What on earth was going on, and can it be achieved with other instruments? Can it be used in any practical way in music–making?

Hugh Robjohns

Ellen Maltby, via email

SOS Technical Editor Hugh Robjohns replies: This sounds amazing! I suspect that the note intervals generated an interference beat–tone which resonated as a standing wave within the hall — the standing wave bit seems certain given your comment about it being audible at specific places within the room. I imagine the same would be possible with anything that generated suitably sustained tones of the right pitch — relatively pure–toned instruments, rather than very harmonically complex ones.

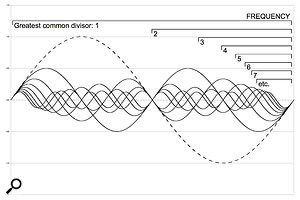

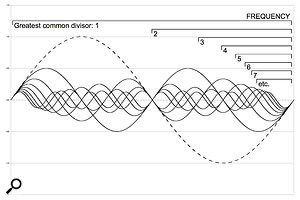

A ‘missing fundamental’ (dotted line) can sometimes be ‘heard’ if enough of its harmonics are present.

Could it be practical for music-making? If it’s related to standing waves, then it will be totally building-specific, in which case it might be useful for a piece that will only ever be performed in the one place, but it would fail if performed elsewhere (assuming the other venue didn’t match the precise dimensions). I have in the back of my mind that some monastic choral music only works in the monasteries where it was written — and that may be for similar reasons... or that might just be the random regurgitations of a befuddled mind!

SOS contributor Mike Senior adds: I’d agree with Hugh that acoustic interference effects almost certainly play an important part in creating the ‘phantom bass singer’ you heard. In other words, the frequency differences between note fundamentals are creating interference ‘beats’ at frequencies in the audible spectrum — in your specific case at a frequency of around 65.5Hz for C2 (two octaves below middle C). The chord you mention provides maximum opportunity for this, because it neatly arrays the fundamental of each note 65.5Hz apart from both its neighbours — assuming, that is, that the choir are singing unaccompanied and tuning amongst themselves by ear, using what we call ‘just’ intonation rather than the equal–tempered intonation used by most keyboard instruments.
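The even spacing described above can be checked with a little arithmetic. The sketch below treats each note of the chord as a whole-number multiple of C2 (just intonation) and compares the gaps between neighbouring notes with those of the same chord in equal temperament. The value of 65.4Hz for C2, the reading of ‘flat 7’ as the seventh harmonic, and all the names in the code are assumptions made for illustration.

```python
C2_HZ = 65.4  # approximate frequency of C2; the reply above rounds it to 65.5 Hz

# (note name, harmonic number of C2, semitones above C3)
# Reading "flat 7" as the 7th harmonic is an assumption made for this sketch.
CHORD = [
    ("C3", 2, 0), ("G3", 3, 7), ("C4", 4, 12), ("E4", 5, 16),
    ("G4", 6, 19), ("Bb4", 7, 22), ("C5", 8, 24), ("D5", 9, 26),
]

def adjacent_gaps(freqs):
    """Frequency gap between each note and the next one up."""
    return [upper - lower for lower, upper in zip(freqs, freqs[1:])]

# Just intonation: every note is a whole-number multiple of C2.
just = [harmonic * C2_HZ for _, harmonic, _ in CHORD]
# Equal temperament: every semitone is a factor of 2**(1/12) above C3.
equal = [2 * C2_HZ * 2 ** (semitones / 12) for _, _, semitones in CHORD]

just_gaps = adjacent_gaps(just)
equal_gaps = adjacent_gaps(equal)

print("just gaps: ", [round(gap, 1) for gap in just_gaps])
print("equal gaps:", [round(gap, 1) for gap in equal_gaps])
```

In the just-intonation version every gap equals C2 itself, so all the difference tones reinforce the same pitch; in equal temperament the gaps are scattered over a range of several hertz and no single low tone is favoured.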

However, the acoustic aspect of this effect was probably only part of what you heard. A very similar phenomenon has been demonstrated to occur within the physiological apparatus of the inner ear (so it doesn’t rely on tones mixing in the air), as well as entirely as a perceptual illusion (difference tones can be created even between sounds fed independently to separate ears over headphones — fairly freaky if you think too hard about it!).

But why do you hear this added tone as forming part of the choir, rather than as some kind of separate note? I think this has something to do with the ‘missing fundamental’ effect, whereby we judge the pitch of a note according to its fundamental frequency, regardless of whether we can actually hear the fundamental. In other words, we’re very adept at perceptually extrapolating what a note’s fundamental should be even when we’re only presented with the upper partials of that note’s harmonic series. A common audio–engineering ramification of this is that we can still recognise low–pitched notes when they’re coming through a small speaker that could never produce their fundamental frequencies.

In your situation, I reckon that your brain is (incorrectly) identifying the presence of a C2 note within the choir texture, simply because so many of the important frequency components of that note’s harmonic series are present. Your perception is filling in the missing fundamental, making it appear as if there’s someone in the choir channelling the spirit of Barry White!

So what can we use this effect for? Not much, to be honest, simply because you need a stack of quite pure tones in ‘just’ intonation to create appreciable difference–tone levels, and the appeal of that kind of chordal texture is fairly limited, not least because most Western music is based around the deliberate tuning impurities of the equal–tempered scale. Plus, the relationship between musical intervals and the difference tones they generate is rather counter–intuitive from a musical perspective — whereas a C3–G3 interval produces a difference tone at C2, the C3–F3 and C3–A3 intervals give, respectively, difference tones a fifth below (F1) and a fourth above (F2). In practice, this pretty much rules out using difference tones creatively for most musicians, unless your music–making tends to involve burning a lot of incense. In fact, the most practical application of the idea I’ve discovered is where some church organs use difference tones (termed ‘resultant tones’) to mimic the effects of deep–pitched pipes that would be physically too long to install.
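The interval examples can be worked through with exact fractions. This is a minimal sketch that assumes the usual small-integer just-intonation ratios for the fifth (3:2), fourth (4:3) and major sixth (5:3); the names in the code are illustrative only.

```python
from fractions import Fraction

# Just-intonation ratios of each upper note to C3 (assumed small-integer tunings).
INTERVALS = {
    "C3-G3": Fraction(3, 2),  # perfect fifth
    "C3-F3": Fraction(4, 3),  # perfect fourth
    "C3-A3": Fraction(5, 3),  # major sixth
}

C3_OVER_C2 = Fraction(2)  # C3 is one octave above C2

def difference_tone_over_c2(interval: Fraction) -> Fraction:
    """Difference-tone frequency expressed as a ratio to C2.

    The difference tone is (upper - lower), and the lower note is C3.
    """
    return C3_OVER_C2 * (interval - 1)

for name, ratio in INTERVALS.items():
    print(name, difference_tone_over_c2(ratio))
```

The three results are 1, 2/3 and 4/3 times C2: C2 itself, a just fifth below it (F1) and a just fourth above it (F2), matching the notes named above.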
