Welcome to No Limit Sound Productions. Where there are no limits! Enjoy your visit!

| Company Founded | 2005 |
|---|---|
| Overview | Our services include Sound Engineering, Audio Post-Production, System Upgrades and Equipment Consulting. |
| Mission | Our mission is to provide excellent quality and service to our customers. We provide customized service. |

Saturday, November 29, 2025

Friday, November 28, 2025

Cubase 11 Scale Assistant

Once the Chord Pads have been used to create an initial chord sequence, the Chord Editing panel makes it easy to add further interest via the Inversions and Drop Notes options without changing the actual chords.

In need of melodic inspiration? Cubase 11's Scale Assistant can help.

In the Pro, Artist and Elements versions of Cubase 11, the already impressive suite of compositional aids was joined by the new Scale Assistant. This is potentially very useful as a corrective tool — if the piano keyboard isn’t your primary instrument, for example, or your music theory is a bit rusty — but it can also be great when you need a little melodic inspiration.

For our example this month, I’ll construct the bare chordal and melodic bones of an eight‑bar musical ‘chunk’, highlighting some easy ways to play with repetition and variation, and then use Cubase’s Scale Assistant to lend a creative hand when developing a melody to sit within this chunk.

Strike The Right Chord Sequence

We’ll start with two instances of HALion Sonic SE, one with a simple electric piano (for the chord sequence) and the other a synth lead patch (for the melody). You can also add a basic drumbeat or loop to provide a rhythmic bed if you find that helps.

I generally like to start songwriting with a chord sequence and, as my own piano skills tend towards the ‘one hand, three fingers’ level, I’m a big fan of using Cubase’s Chord Pad and Chord Editing features to experiment. I discussed the Chord Pads back in SOS May 2015 and for this example I followed the same basic methodology, populating the pads with some basic chords in D major (D, G, A and Bmin), with two different voicings of each chord. Experimenting with different chord sequences, including the ability to add both rhythm and dynamics, then becomes easy, single‑finger work. I opted for a classic (indeed, cliché!) four‑bar sequence that repeats twice but with slightly different rhythm/dynamics in the final two bars the second time around.

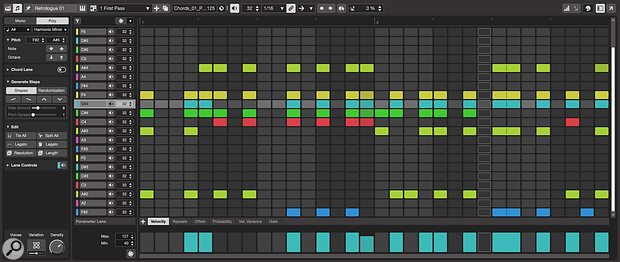

While the Chord Pad system lets you add chord voicing variations on the fly, I’ll often use the Key Editor’s Chord Editing panel for this stage, and the most useful options here are provided by the Inversions and Drop Notes sections. These allow you to select any chord(s) in your MIDI clip and adjust the voicing, so you keep the chord sequence while still developing a sense of musical movement in the performance. As shown in the screenshot, I’ve used these options to change the voicing of the last chord hit of each bar and then, in the final two bars, to add a sense of upward motion to the chords.
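Inversions and drop voicings are simple operations on a chord's note numbers, and a short sketch may make the idea concrete. This is a generic music-theory illustration in Python, not Steinberg's implementation; the function names `invert` and `drop` are hypothetical, and chords are plain lists of MIDI note numbers.

```python
def invert(chord: list[int], times: int = 1) -> list[int]:
    """Apply `times` inversions: each one moves the lowest note up an octave."""
    notes = sorted(chord)
    for _ in range(times):
        notes = sorted(notes[1:] + [notes[0] + 12])
    return notes


def drop(chord: list[int], position: int = 2) -> list[int]:
    """Move the `position`-th highest note down an octave (position=2 gives 'drop 2')."""
    notes = sorted(chord)
    if not 1 <= position <= len(notes):
        raise ValueError("position must refer to a note in the chord")
    notes[len(notes) - position] -= 12
    return sorted(notes)


d_major = [62, 66, 69]          # D major triad, root position: D, F#, A
print(invert(d_major))          # [66, 69, 74]  first inversion: F#, A, D
print(drop(d_major, 2))         # [54, 62, 69]  F# dropped below the root
```

Both operations keep the same pitch classes, so the chord itself is unchanged; only the spacing, and with it the sense of movement between chords, differs.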

Melody, Call My Assistant

The Scale Assistant works within the Key Editor so, for our melody, the first step is to create an empty eight‑bar MIDI clip on our ‘melody’ track. With this clip open in the Key Editor, you can expand the Scale Assistant pane in the Inspector. Choosing ‘Use Editor Scale’ then lets us use the drop‑down options to select the key of D and the major scale to match our chord sequence. As we’re going to use the note‑drawing tools to create some melody ideas, Snap Pitch Editing is probably the most important of the other tickboxes. With this engaged, if we add or edit notes within the clip, they’ll automatically snap to notes within the scale.
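Snap Pitch Editing can be pictured as a function that replaces any entered pitch with the nearest pitch belonging to the chosen key and scale. The Python sketch below assumes MIDI note numbers and resolves ties (an out-of-scale note exactly between two scale notes) downwards; Cubase's own tie-breaking rule is not described here, so treat that choice, and the helper names `in_scale` and `snap_to_scale`, as assumptions.

```python
MAJOR_STEPS = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets of the major scale from its root


def in_scale(note: int, root_pc: int, steps=MAJOR_STEPS) -> bool:
    """True if the MIDI note's pitch class belongs to the scale built on root_pc (C = 0)."""
    return (note - root_pc) % 12 in steps


def snap_to_scale(note: int, root_pc: int, steps=MAJOR_STEPS) -> int:
    """Return the nearest in-scale MIDI note, preferring the lower note on a tie."""
    for distance in range(12):
        if in_scale(note - distance, root_pc, steps):
            return note - distance
        if in_scale(note + distance, root_pc, steps):
            return note + distance
    raise ValueError("the scale contains no notes")


D = 2                          # pitch class of D
print(snap_to_scale(65, D))    # 64: F is not in D major, so it snaps down to E
print(snap_to_scale(72, D))    # 71: C sits between B and C#; the tie goes to B
```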

If you wish, you can change the note lane display in the Key Editor. I prefer the Key Editor’s Pitch Visibility ‘Show Pitches from Scale Editor’ option over the Scale Assistant’s ‘Show Scale Note Guides’ option. The former simply removes note lanes for all out‑of‑scale notes from the display, whereas the latter changes the pattern of light‑grey/dark‑grey shading of the note lanes. I tend to find the latter confusing, given how ingrained it is to think of this shading as reflecting the pattern of white/black piano keys.

Finally, before we start to create melody ideas, engage Snap (to Grid) and select a suitable quantise resolution. The latter will define the initial spacing of any notes in our melody. I stuck with 1/8th notes throughout the worked example, but you can experiment with shorter/longer notes or different note lengths in different sections (sets of bars) of your melody.

My initial melody, created using the Line tool, with the Scale Assistant ensuring that every note is in the required key/scale.

Now for the fun part: ‘drawing’ our initial melody idea. We could just start adding individual notes with the Pencil tool, but there’s more fun to be had with the Line tool, and its straight‑line option works best. If you draw a straight line in the note area, the line is transformed into a sequence of notes that follow its slope and, thanks to the Scale Assistant, each of these notes is also snapped to the nearest note in the scale.
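The combination of the Line tool and the Scale Assistant can be approximated in a few lines: sample the straight line once per grid step (1/8 notes, as in the worked example), round the line's height to a chromatic pitch, then snap that pitch into D major. This is a hypothetical model for illustration only, not how Cubase is implemented; positions are in quarter-note beats (so a 1/8 grid is a step of 0.5), and the `Note` type and function names are made up.

```python
from dataclasses import dataclass

D_MAJOR_PCS = {1, 2, 4, 6, 7, 9, 11}  # pitch classes C#, D, E, F#, G, A, B


@dataclass
class Note:
    start_beats: float  # position in quarter-note beats
    pitch: int          # MIDI note number


def snap(pitch: int) -> int:
    """Nearest D major pitch; on a tie the lower candidate wins."""
    for distance in range(12):
        for candidate in (pitch - distance, pitch + distance):
            if candidate % 12 in D_MAJOR_PCS:
                return candidate
    raise ValueError("empty scale")


def line_to_notes(start_beat: float, end_beat: float,
                  start_pitch: int, end_pitch: int, step: float = 0.5) -> list[Note]:
    """One note per grid step along a straight line, each snapped into the scale."""
    n_steps = round((end_beat - start_beat) / step)
    notes = []
    for i in range(n_steps):
        height = start_pitch + (end_pitch - start_pitch) * i / n_steps
        # Python's round() sends exact halves to the nearest even number
        notes.append(Note(start_beat + i * step, snap(round(height))))
    return notes


# One 4/4 bar of 1/8 notes on a line rising from D (62) towards the D an octave up (74)
for note in line_to_notes(0.0, 4.0, 62, 74):
    print(note.start_beats, note.pitch)
```

A steeper line simply produces bigger pitch jumps between grid steps, which is why drawing several short lines of different slopes gives the melody its rises and falls.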

Simply by drawing a sequence of short (for example, one bar long) straight lines, each with a different direction/steepness of slope, you can create an initial ‘flow’ for your melody idea, with rises and falls in pitch — and all devoid of any out‑of‑key notes! The screenshot shows my worked example and I’ve annotated it to give an idea of the different line segments I drew across the eight bars to create this initial pattern of notes.

Write & Rewrite

Of course, this initial idea is unlikely to be the finished deal, so some editing will almost certainly now be required. After making a copy of the MIDI clip (in case you want to return to the original when it all goes horribly wrong), this editing can be done in three stages, although you can move back and forth between these stages as the melodic ideas take shape.

First, as you cycle playback of the chord/melody parts, use the mute tool to swap notes in/out of the melody. This stage might thin out the number of notes and, as a result, help define a rhythmic pattern for the melody. Second, feel free to move a note or two up/down if this makes the melody more interesting. Third, you don’t have to use every bar of the original melody created by the drawing process; as you refine things, look for single‑bar phrases that you like. Once you have two or three of these, discard the rest, and assemble these very best bits across the eight bars to create the required sense of melodic repetition.

The next screenshot shows what I ended up with after this editing process. Aside from there being fewer notes and some velocity variation added, the key thing to note is the result of the third editing stage discussed above. Having done stages one and two, I identified a few of my original bars (1, 4 and 7+8) and reconstructed my eight‑bar sequence based around only those.

After a few minutes’ editing work, the final eight‑bar melody tries to strike the ideal balance between repetition and variation.

Within the screenshot, I’ve highlighted this in the Marker track at the top of the Key Editor display. The phrase from the first bar actually appears five times: in bars 1, 2, 3, 5 and 6. However, in bars 2 and 6 it has been shifted down by two notes in the scale (it starts on B2 rather than D2). These alternative bars have the same rhythmic and melodic pattern (so there’s a sense of repetition), but the different starting pitch adds a sense of variety; it’s different but not ‘too different’.

Pitch‑shifting these notes is easy with Snap Pitch Editing engaged: simply select the required notes and drag them up or down, and the Scale Assistant makes sure everything stays in key (this makes it a great tool for creating harmony parts, but that’s a topic for another day!). Finally, Phrase 2 (in bar 4) adds a twist, in rhythm and pitch, so things don’t become too repetitive, while bars 7+8 add further variety and bring the overall eight‑bar sequence to a conclusion.
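Dragging notes with Snap Pitch Editing engaged amounts to moving them by scale degrees rather than by semitones, which is why a shifted phrase keeps its contour while its intervals adapt to the key. A minimal Python sketch, assuming D major and MIDI note numbers; `shift_by_scale_steps` is a hypothetical helper, not a Cubase function.

```python
ROOT_PC = 2                                 # D (C = 0)
MAJOR_STEPS = (0, 2, 4, 5, 7, 9, 11)
# Every MIDI note (0-127) that belongs to D major, in ascending order
SCALE_NOTES = [n for n in range(128) if (n - ROOT_PC) % 12 in MAJOR_STEPS]


def shift_by_scale_steps(phrase: list[int], steps: int) -> list[int]:
    """Move each in-key note by `steps` scale degrees (negative = down)."""
    shifted = []
    for note in phrase:
        idx = SCALE_NOTES.index(note)        # ValueError if the note is out of key
        target = idx + steps
        if not 0 <= target < len(SCALE_NOTES):
            raise ValueError("shift goes outside the MIDI note range")
        shifted.append(SCALE_NOTES[target])
    return shifted


phrase = [62, 64, 66, 64]                   # D, E, F#, E
print(shift_by_scale_steps(phrase, -2))     # [59, 61, 62, 61]: B, C#, D, C#
```

The shifted phrase has the same up/down shape, but where the original moves by whole tones (E to F# and back) the new version moves by semitones (C# to D and back): different, but not ‘too different’.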

Are We There Yet?

Well, no. But our song is now started — and that’s often the hardest step. Hopefully, you can see the principles at play here and how the Scale Assistant makes this sort of melodic experimentation very easy, regardless of your piano playing or music theory skills. Of course, the same process can then be repeated to create the other song sections. You then add a little work on the overall song structure (more careful use of repetition will be required here) before finally flexing your production skills to transform your bare‑bones song idea into a fully fledged, ear‑candy‑laden, international hit. Just don’t forget a songwriting credit for Cubase!

Thursday, November 27, 2025

Wednesday, November 26, 2025

Cubase 11: Pulse & Ticker

The Sampler Control panel is easy to use but still provides plenty of creative sound design options. Here, I’m turning a kick drum sample into a pulse sound.

Cubase 11’s Sampler Track makes a great starting point for percussive sound design.

Groove Agent SE 5 offers all Cubase users a good selection of drum and percussion sounds but sometimes it’s nice to roll your own, particularly if you’d like some alternative percussive sounds such as pulses and tickers — a favourite of film/TV composers but also useful rhythmic ear candy in all sorts of electronic music. Cubase has some cool tools that can be used for this type of task, so let’s explore.

One Slice Or Three?

For this worked example (and the audio clips that accompany it on the SOS website: https://sosm.ag/cubase-0621), we’ll create three types of sound: a deep pulse (heartbeat); a ticker (clock‑like sound); and a short whoosh riser. There are plenty of options for sourcing starting sounds, including hitting objects around your studio or house but, for this example, I simply picked a drum loop with sonic potential. Cubase provides various options for percussive sound design, but here I’ll focus on the Sampler Track, whose super‑friendly UI makes life particularly easy.

Create an empty Sampler Track, open the Sampler Control panel in the Lower Zone, and drag and drop your drum loop into this panel. Switching the Playback Mode to Slice (new in the Sampler Track for Cubase 11) will, by default, slice your loop at its obvious transients and then map the slices across the MIDI note range, so you can easily audition each hit to identify the best candidates for sound design.
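The Sampler Track's transient detector isn't described in this article, so the Python sketch below swaps in a deliberately simple energy-based approach to show the general idea: measure the RMS level of short frames, flag an onset wherever the level jumps sharply, and map each resulting slice to consecutive MIDI notes. The frame size, jump ratio, noise floor and starting note (60) are all assumptions chosen for illustration, and the function names are hypothetical.

```python
import math


def detect_onsets(samples: list[float], frame: int = 256,
                  jump: float = 4.0, floor: float = 1e-4) -> list[int]:
    """Sample indices of frames whose RMS level exceeds `jump` times the previous frame's."""
    onsets = []
    prev_rms = 0.0
    for start in range(0, len(samples) - frame + 1, frame):
        block = samples[start:start + frame]
        rms = math.sqrt(sum(x * x for x in block) / frame)
        if rms > floor and rms > jump * max(prev_rms, floor):
            onsets.append(start)
        prev_rms = rms
    return onsets


def map_slices(onsets: list[int], total_len: int, first_note: int = 60):
    """Pair each slice (onset to next onset, or to the end) with consecutive MIDI notes."""
    bounds = onsets + [total_len]
    return [(first_note + i, bounds[i], bounds[i + 1]) for i in range(len(onsets))]


# Synthetic 'loop': two decaying hits at samples 1024 and 3072 in 4096 samples of silence
loop = [0.0] * 4096
for hit in (1024, 3072):
    for i in range(1024):
        loop[hit + i] += 0.8 * math.exp(-i / 150)

onsets = detect_onsets(loop)
print(onsets)                               # [1024, 3072]
print(map_slices(onsets, len(loop)))        # [(60, 1024, 3072), (61, 3072, 4096)]
```

Real detectors are considerably more robust (they typically analyse frequency content as well as level), but the principle of ‘find the sharp rises, cut there, assign a key to each piece’ is the same.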

All The Trimmings

If you click on a slice you like, it gets highlighted in orange. Ideally, you’d now be able to use the Sampler Control’s Transfer To New Instrument button to send the selected slice to a new instance of the Sampler Track but, sadly, the Sampler Track isn’t one of the available ‘new instrument’ destinations. Hopefully that’s a feature Steinberg might add at some stage; in the meantime, the workaround is easy enough. Having taken careful note of the position of the drum hit within the loop, switch the Playback Mode to Normal, then click and drag to highlight the required part of the loop (select slightly more than the original slice itself; we will tidy up the selection in a minute). Then, on the Sampler Control Toolbar, press Trim Sample (it looks like a pair of square brackets). The rest of the original waveform will disappear so you can focus on your selection. It hasn’t been deleted; it is just hidden from view.

To isolate a second slice from our loop, duplicate the first instance of the Sampler Track. Then, on the Toolbar, press Revert To Full Sample. The full loop will reappear and you can isolate a further source. This process can be repeated until you have the required number of sounds and Sampler Tracks.
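Trim Sample and Revert To Full Sample behave like a non-destructive edit: the audio is kept and only the playback window changes. The hypothetical Python model below (the `SampleRegion` class is made up for illustration and does not reflect Steinberg's internals) shows why duplicating the track and reverting gives you the whole loop back.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass
class SampleRegion:
    audio: list                 # the full sample; never modified by trim/revert
    start: int = 0
    end: Optional[int] = None   # None means "play to the end of the sample"

    def trim(self, start: int, end: int) -> None:
        """Hide everything outside [start, end) without touching the audio."""
        if not 0 <= start < end <= len(self.audio):
            raise ValueError("selection outside the sample")
        self.start, self.end = start, end

    def revert(self) -> None:
        """Make the whole sample playable again."""
        self.start, self.end = 0, None

    def playable(self) -> list:
        return self.audio[self.start:self.end]


loop = SampleRegion(audio=list(range(10)))   # stand-in for a drum loop's samples
loop.trim(2, 5)                               # first Sampler Track keeps one hit
second = replace(loop)                        # 'duplicate the track': same audio and window
second.revert()                               # the full loop is still there...
second.trim(6, 9)                             # ...so a different hit can be isolated
print(loop.playable(), second.playable())     # [2, 3, 4] [6, 7, 8]
```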

The new Slice mode is great for auditioning sounds in a drum loop, but it would be even better if you could then send a selected slice to a new Sampler Track instance!

Just Do It

Given our ‘low pulse’, ‘ticker’ and ‘short riser’ targets, I picked a kick drum, a hi‑hat and a splashy electronic cymbal sound as my starting points. With each on its own Sampler Track, you can fine‑tune the selection start and end points in the waveform display. Now for the fun part: tweaking these starting points. With sound design, there aren’t really any rules: you just experiment until something sounds good (or weird, or wonderful; the choice is yours!). Still, a few directions naturally suggest themselves with this sort of material...

For the kick drum, I used the Sampler Control panel’s Pitch section to pitch‑shift the sample down one octave, to emphasise the low end. In the Amp Mod section, adjusting the envelope’s attack stage lets you move from a harder sound (with more of the initial ‘click’) to a softer one; I went with the latter here, for a more subtle pulse sound. In the Playback Mode section, I also selected Audiowarp’s Solo mode so that I could experiment with the Formant control. This is often used to avoid weird artefacts when pitch‑shifting vocals, but it’s ripe for abuse in sound design and can be great for ‘adding some weird’. In the Filter section, I lowered the low‑pass filter’s cutoff to remove some high end and applied a dollop of Resonance and Drive for extra interest.

For the ticker sound, I shifted the pitch of my hi‑hat sample up two octaves. I then used a band‑pass filter and set the cutoff and resonance controls to shape the overall character.

For the short riser, I used the Reverse button on the Sampler Control’s Toolbar to reverse the cymbal sample. I then used the waveform display’s fade‑in option to emphasise the rise in volume of the reversed sound over time, applied some fairly extreme pitch‑shifting (down three octaves) in the Pitch section, and added a low‑pass filter in the Filter section. The result was almost like white noise, and the Playback Mode presents some interesting choices for this kind of sound. In Normal mode, both pitch and playback speed (resulting in longer or shorter rise times) can be controlled by MIDI note pitch. If you select Audiowarp playback with the Solo Mode and Tempo Sync options, however, different MIDI notes still change the riser’s pitch while its length stays the same in all cases. Both configurations can be useful; which is best depends on the composition.
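The difference between the two playback configurations comes down to whether pitch and speed are linked. With resampling-style playback, a pitch change of n semitones multiplies playback speed by 2^(n/12), so the length scales by the inverse; with time-stretched, tempo-synced playback the length stays fixed. The Python sketch below models both cases. Treating Audiowarp Solo mode with Tempo Sync as perfectly length-preserving, and using 60 as the root key, are simplifying assumptions, and the function names are hypothetical.

```python
def playback_ratio(semitones: float) -> float:
    """Speed (and pitch) ratio for a resampled pitch change: +12 semitones doubles it."""
    return 2.0 ** (semitones / 12.0)


def riser_length(original_s: float, note: int, root_note: int = 60,
                 length_locked: bool = False) -> float:
    """Length in seconds of the riser when triggered by MIDI `note`.

    length_locked=False: resampling-style playback, so pitch and speed change together.
    length_locked=True: time-stretched, tempo-synced playback, so only pitch changes.
    """
    if length_locked:
        return original_s
    return original_s / playback_ratio(note - root_note)


for note in (48, 60, 72):                    # an octave below, at, and above the root key
    print(note, riser_length(2.0, note), riser_length(2.0, note, length_locked=True))
# 48 4.0 2.0
# 60 2.0 2.0
# 72 1.0 2.0
```

Under the same resampling model, a three-octave drop corresponds to `playback_ratio(-36)`, i.e. one eighth of the original speed.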

The Sampler Control panel’s options may be easy to use, but they can radically alter your underlying sounds. Still, Cubase has plenty of other processing options. The various saturation and distortion plug‑ins are always worth trying, but more basic tricks can pay off too: experiment with low/high‑cut EQ in the Channel Settings window to refine the ‘space’ occupied by each of your sounds, so they don’t tread on each other’s toes. If your DIY sounds are being used to create a ‘tension’ cue (as in the accompanying audio examples), some reverb and delay would be in order too; a little dotted 1/8th note delay on a ticker‑style sound can provide some cool rhythmic options.
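The timing of a dotted 1/8th note delay is simple arithmetic: a quarter note lasts 60,000/BPM milliseconds, an eighth note half that, and the dot adds half again. A small Python helper, assuming the beat is a quarter note (as in 4/4); `note_ms` is a hypothetical name, and a tempo-synced delay plug-in will normally do this calculation for you.

```python
def note_ms(bpm: float, beats: float) -> float:
    """Duration in milliseconds of `beats` quarter-note beats at `bpm`."""
    return 60_000.0 / bpm * beats


EIGHTH = 0.5                  # an eighth note is half a quarter-note beat
DOTTED_EIGHTH = EIGHTH * 1.5  # a dot adds half the note's own value

print(note_ms(120, DOTTED_EIGHTH))   # 375.0
print(note_ms(100, DOTTED_EIGHTH))   # 450.0
```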

Perform As One

As things currently stand, you have to create separate MIDI parts for each individual Sampler Track to build a complete performance and, personally, I prefer to program parts for these sorts of percussion sounds on a single track. This makes for easier MIDI editing or use of MIDI plug‑ins such as Beat Designer or Step Designer to create pattern‑based performances combining all the sounds. If your Sampler Track sound design ‘kit’ consists of up to four sounds, there’s a neat solution to this, in the form of MIDI Sends.

Using MIDI Sends on a ‘master’ MIDI track allows you to create and edit your MIDI performance more easily while still sending the MIDI data to multiple Sampler Tracks.

First, create an empty MIDI track that’s not associated with any virtual instrument. This will be your ‘master’ MIDI track. In its Inspector panel open the MIDI Sends panel. Once you activate a send slot, you can click on the MIDI output field and specify where the MIDI data will be sent… including any Sampler Track! If you leave the respective Effects fields empty, all MIDI data on this ‘master’ MIDI track will be passed to the MIDI output field destination unaltered.

Then, for each Sampler Track, use the mini keyboard display at the base of the Sampler Control panel to specify a MIDI note range that each track will respond to. I specified C2‑B2 for my low pulse sound, C3‑B3 for my ticker and C4‑C7 for my short rise sound. The ‘master’ MIDI track can then be used to record and edit the MIDI performance for all three sounds, much as you would with a VSTi drum kit but with the convenience of the super‑easy sample/sound design offered by separate Sampler Tracks.
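Because each MIDI send passes every note on unaltered, the ‘kit’ works by filtering at the receiving end: each Sampler Track responds only to its own key range. The Python sketch below models that split. It assumes Cubase's default note naming, in which MIDI note 60 is displayed as C3 (so C2 = 48, B2 = 59 and C7 = 108); the `route` function is purely illustrative.

```python
# Key ranges from the article as MIDI note numbers, assuming Cubase's default naming
# in which MIDI note 60 is displayed as C3 (so C2 = 48, B2 = 59, C4 = 72, C7 = 108).
KEY_RANGES = {
    "low pulse": (48, 59),     # C2-B2
    "ticker": (60, 71),        # C3-B3
    "short riser": (72, 108),  # C4-C7
}


def route(note: int) -> list[str]:
    """Names of the Sampler Tracks that respond to `note`.

    Every MIDI send forwards every note; each track simply ignores notes
    outside its own key range.
    """
    return [name for name, (low, high) in KEY_RANGES.items() if low <= note <= high]


for note in (50, 64, 84, 120):
    print(note, route(note))
# 50 ['low pulse']
# 64 ['ticker']
# 84 ['short riser']
# 120 []
```

Keeping the ranges non-overlapping means each note on the master track triggers exactly one sound, much like a drum kit map.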

One I Made Earlier

There is one more step that’s optional but also very useful. If you select both your master MIDI track and all the associated Sampler Tracks, and then right‑click, you can choose ‘Save Track Preset’ from the pop‑up menu. This creates a multitrack‑based Track Preset that you’ll be able to recall in any future project. Once you have recalled it, your master MIDI track and all the Sampler Tracks will reappear in your new project, with all the samples and sound design settings intact. Just remember to check that the audio output routing for the Sampler Tracks is suitable for the new project and you can start work on your next cue.

Tuesday, November 25, 2025

Monday, November 24, 2025

Steinberg Cubase 15 arrives

The latest version of Steinberg’s hugely popular DAW software has just launched, bringing with it an array of new features that promise to set a new standard for music-production software. Cubase 15 includes new tools aimed at everyone from beginners to blockbuster composers and, as always, comes in three versions that cater to a wide range of use cases and budgets.

What’s New?

Standout features of the latest version include an update to the DAW’s Expression Maps system, which now boasts deeper integration with the Key Editor and Score Editor, as well as benefitting from improved performance realism thanks to per-articulation attack compensation. The Pattern Editor, meanwhile, has been kitted out with a new pattern sequencer with monophonic and polyphonic modes, while the collection of Modulators introduced in Cubase 14 has been expanded to include new tools.

The Pattern Sequencer kits Cubase 15 out with a built-in monophonic/polyphonic step sequencer.

Starting a new project is said to be smoother than ever courtesy of a redesigned Hub that includes resizable sections, audio setup and project preview, along with some powerful search and filter tools. Collaboration has also been improved, with the DAWproject format now able to share sessions across all editions of Cubase, Cubasis and other supported DAWs without losing any of a project’s structure.

Remixing, rebalancing and creative sampling have been enhanced with AI-powered separation tools. New automation shortcuts automatically show and prioritise the last-touched parameter and add a simplified menu for quick access and editing, while volume and pan can be added directly to the Track Controls Area. There are also new quick export options that offer a faster way to export bounces mid-session.

New effects include UltraShaper, which is said to take dynamics processing to a new level with a combination of transient shaping, clip limiting and EQ, while Omnivocal (currently in beta) uses Yamaha’s cutting-edge vocal synthesis to create expressive, human-like singing voices. There’s also PitchShifter, which features real-time creative and corrective pitch-shifting, formant preservation, saturation modes, stereo unlinking and a ±24 semitone range. All of the DAW’s stock plug-ins now support GUI scaling, and some additional content has been added to the likes of Drum Machine and Writing Room Synths.

Other Improvements

Alongside the headline features, Cubase 15 also includes a selection of more minor additions and improvements. These include an updated Score Editor with new tools for faster, more inspiring notation; the ability to instantly replace samples in the Sampler Track via MediaBay with filter-based browsing shortcuts; and macOS support for native full-screen mode and pinch-to-zoom gestures on compatible devices.

“We worked closely with our community to refine what matters most, turning valuable feedback into meaningful improvements that make everyday workflows smoother and more intuitive. This release builds naturally on the foundation of the previous version — it feels like the perfect evolution, marking a major leap forward for Cubase.” - Matthias Quellmann, Senior Marketing Manager, Steinberg

Compatibility

Cubase 15 is supported on PCs running Windows 10 or higher, and Macs running macOS 15 and above.

Pricing & Availability

Cubase 15 is available now, with pricing as follows:

- Cubase Pro 15: $579.99.

- Cubase Artist 15: $329.99.

- Cubase Elements 15: $99.99.

A range of crossgrade and upgrade discounts are available. More information can be found on the Steinberg website.

Published 5/11/25

Saturday, November 22, 2025

Friday, November 21, 2025

Cubase 11: SuperVision Explained

SuperVision’s Loudness and Wavescope modules, with instances placed before and after the master bus processing chain (top‑right). The visual feedback about the changes in the loudness and waveform can help to inform your processing decisions.

Cubase 11’s new SuperVision plug‑in makes it easier for Pro and Artist users to trust their ears.

Gavin Herlihy’s ‘Why Your Ears Are Lying To You’ article in SOS May 2021 (http://sosm.ag/why_ears_lie) was a sobering reminder that you can’t always trust what your ears are telling you. This is why it’s helpful when recording, mixing and mastering audio to have good metering options to support your listening‑based decision making, and the Pro and Artist editions of Cubase 11 added a powerful new audio analysis and metering plug‑in: SuperVision. I can’t hope to cover its 18 different analysis modules in detail here, but by way of an introduction, I’ll consider how it might help with two common practical mixing tasks: setting your master bus processing and checking for lead vocal masking.

First, though, I need to go over some basics. SuperVision is an insert plug‑in, so you can use it to visualise your audio at any point in a signal chain, and you can use multiple instances in a project. By default, a new instance opens with a single Level module; the tool‑strip at the top, however, includes a drop‑down menu for selecting any of the 18 module types. The two top‑right buttons allow you to split the display of the selected module vertically or horizontally, to add a further module, and you can repeat this process to display up to nine modules in a single instance. You can resize the overall plug‑in window, and customised layouts of modules can be saved as presets for instant recall.

The ‘cog’ button opens a further options page for the currently selected module (I’ll discuss some examples below), and other useful buttons in this tool strip allow you to pause, hold and reset the values in the current module. Holding Alt/Option while clicking on the Pause or Reset buttons applies the action to all modules in the plug‑in. Usefully, you also have the option of resetting a module’s display each time playback commences.

Master Bus Processing

While not everybody is a fan of mix‑bus processing, it’s common practice either to mix into a processing signal chain on your master bus or to add some master‑bus processing in the final stages of a mix; EQ, dynamics processing and saturation are all commonly applied. Precisely how much of this processing (all, some or none) you actually ‘print’ to your final mix is a broader question, but if you’re going to export your mix with the processing in place, it’s helpful to have as much information as possible about the changes that result from this processing. A number of SuperVision’s modules can help here, but I’ll focus on a combination of two: Loudness and Wavescope (shown above).

The Loudness module provides a pretty comprehensive numerical and visual summary of the loudness of an audio signal. The exact display can be customised in the Settings window and in the screenshot I’ve selected LUFS and the EBU +18dB display scale (I’ve left all other settings at their defaults). As well as a True Peak value, you get the three standard time‑based averages of loudness: Momentary Max, Short‑Term and Integrated, measured over 400ms, three seconds, and the whole playback period, respectively. Typical targets for streaming services are ‑1.0dBTP for True Peak and ‑14 LUFS for Integrated loudness but, whatever loudness targets you’re aiming for, SuperVision lets you see if you are hitting them. The Range values and visual display show the dynamic range, with higher values indicating greater differences between the loudest and quietest sections of your audio. This can be a useful additional indicator of the impact of your dynamics processing.
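Integrated loudness isn't a simple average. ITU-R BS.1770 (the measurement behind EBU R128 metering) takes the K-weighted power of 400ms blocks, discards blocks at or below an absolute gate of -70 LUFS, then discards blocks more than 10 LU below the loudness of what remains, before averaging the survivors. The Python sketch below implements only that gating step. It assumes the per-channel mean-square values of the K-weighted signal have already been computed for each block (the K-weighting filter and block overlap are omitted), uses channel weights of 1.0 for left and right, and is not SuperVision's code; the function names are hypothetical.

```python
import math

ABSOLUTE_GATE = -70.0   # LUFS
RELATIVE_GATE = -10.0   # LU relative to the absolute-gated loudness


def loudness(power: float) -> float:
    """Loudness (LUFS) from summed, channel-weighted mean-square power."""
    return -0.691 + 10.0 * math.log10(power) if power > 0 else float("-inf")


def integrated_loudness(blocks: list[tuple[float, ...]]) -> float:
    """Gated integrated loudness from per-block, per-channel K-weighted mean squares.

    Each block is a tuple such as (left_ms, right_ms); channel weights are taken as 1.0.
    """
    powers = [sum(block) for block in blocks]
    # Stage 1: keep only blocks above the absolute gate
    stage1 = [p for p in powers if loudness(p) > ABSOLUTE_GATE]
    if not stage1:
        return float("-inf")
    # Stage 2: keep only blocks above (stage-1 loudness - 10 LU)
    threshold = loudness(sum(stage1) / len(stage1)) + RELATIVE_GATE
    stage2 = [p for p in stage1 if loudness(p) > threshold]
    return loudness(sum(stage2) / len(stage2))


def power_for(lufs: float) -> float:
    """Inverse of loudness(): the summed power that measures `lufs`."""
    return 10.0 ** ((lufs + 0.691) / 10.0)


# Ten blocks at -14 LUFS, five quieter blocks at -40 LUFS and five of digital silence
p14, p40 = power_for(-14.0), power_for(-40.0)
blocks = [(p14 / 2, p14 / 2)] * 10 + [(p40 / 2, p40 / 2)] * 5 + [(0.0, 0.0)] * 5
print(round(integrated_loudness(blocks), 2))   # -14.0: quiet and silent blocks are gated out
```

The gating is why quiet intros, breakdowns and silences don't drag the Integrated figure down, so that number reflects the level of the main body of the track.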

The Wavescope module provides a real‑time waveform display for the audio signal. In the Settings, you can adjust the meter’s integration time (how long, in seconds, the ‘window’ is that you are seeing at any one time) and scale the display in various ways. This module does a pretty simple job but when combined with the Loudness module, and with identical instances of SuperVision placed both directly before your master bus processing and directly after, it can be really helpful in informing your processing decisions.

The first screenshot (above) shows an example. Top‑right in the MixConsole, you can see instances of SuperVision placed before and after my master bus processing chain. Comparing the two SuperVision instances, the numerical loudness data and visual waveform changes introduced by the processing are obvious. Such visual feedback is undoubtedly useful in terms of identifying any over‑ambitious loudness gains or potential destruction of transients. Of course, this approach isn’t just useful on your master bus; the feedback can be just as helpful on a drum or lead vocal bus.

Spectrum Curve and Multipanorama can provide visual assistance if you suspect other instruments are conflicting with the all‑important lead vocal.

Detecting Vocal Masking

Another two‑module combination, Spectrum Curve and Multipanorama, can provide visual assistance if you suspect other instruments are conflicting with the all‑important lead vocal. To make the visualisation easier, I’ve set up a stereo bus for my lead vocal that receives the vocal plus any of its send effects (reverb, delay, etc). In this mix, the main instruments ‘competing’ for frequency space with my vocal are some guitar and piano parts, and I have routed these to a further bus.

SuperVision’s side‑chain input option allows you to compare two audio sources in a number of the plug‑in’s modules.

The second screenshot shows how you might configure an instance of SuperVision on the vocal bus described above. In this case, I’ve activated the external side‑chain input, and specified the guitar/piano bus as its source. The upper panel shows the Spectrum Curve module, and in the right drop‑down menu you can choose what frequency curves are displayed. In this example, I’ve selected both the main channel (in this case, the vocal, shown in blue) and the side‑chain input (the guitar/piano bus, in white). Within the Settings panel for the Spectrum Curve module, I’ve activated the Masking option. With this engaged, SuperVision highlights (using blue vertical bars) the frequencies in the main signal that are most likely to be masked by the side‑chain signal — for my example, this is the 300‑600 Hz range, making this an obvious target for some modest EQ cuts on the guitar/piano bus, if I find that I need to give my vocal a little extra space in the mix.

The Spectrum Curve and Multipanorama modules can provide a useful indication of potential masking of your lead vocal by other elements in your mix. If you take advantage of the ability to resize the SuperVision plug‑in, the Multipanorama display can reveal a lot of detail.

The lower panel shows the Multipanorama module. This X‑Y display maps the audio intensity of a signal across the stereo image (horizontal axis) and frequency range (vertical axis). You can flip the axes and change the colour of the display in the module’s Settings panel. At present, this module can only visualise one audio signal at a time. With the module selected, you can use the top‑right drop‑down menu to choose whether the display shows the main audio channel or the side‑chain input, so you can easily flip between the two signals. Not only can you see the frequency ranges where they might clash, but you can also see where in the stereo image those potential clashes occur. This might inform your panning decisions for the instruments or, if you are feeling particularly brave, guide any Mid/Side EQ you might apply to the instrument bus, perhaps making frequency space for your vocal just in the centre of the stereo image while leaving the instruments’ EQ intact towards the sides.
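A display like the Multipanorama needs a stereo position and a level for each frequency band. SuperVision's exact method isn't described here, so the Python sketch below uses one simple measure as an assumption: the normalised difference between right- and left-channel magnitudes (-1 = hard left, 0 = centre, +1 = hard right). The band magnitudes in the example are invented for illustration, and the helper names are hypothetical.

```python
import math


def pan_position(left_mag: float, right_mag: float) -> float:
    """-1.0 = hard left, 0.0 = centre, +1.0 = hard right (0.0 for silence)."""
    total = left_mag + right_mag
    return 0.0 if total == 0 else (right_mag - left_mag) / total


def pan_map(bands: dict[float, tuple[float, float]]) -> dict[float, tuple[float, float]]:
    """Map {band centre Hz: (left, right) magnitude} to {Hz: (pan, level in dB)}."""
    result = {}
    for hz, (left, right) in bands.items():
        total = left + right
        level_db = 20.0 * math.log10(total) if total > 0 else float("-inf")
        result[hz] = (pan_position(left, right), level_db)
    return result


# Invented magnitudes for a guitar/piano bus, purely for illustration
instruments = {150: (0.2, 0.2), 450: (0.6, 0.1), 2000: (0.05, 0.3)}
for hz, (pan, db) in pan_map(instruments).items():
    print(f"{hz:>5} Hz  pan {pan:+.2f}  level {db:6.1f} dB")
```

Run on both the vocal bus and the instrument bus, a map like this shows whether a shared frequency range also shares a stereo position, which is the case where centre-only (Mid) EQ could help.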

Of course, it would be great if the Multipanorama module could display both the main and side‑chain signals at the same time and better still if they could be colour coded. Perhaps that’s something the boffins at Steinberg can add at some point. It’s also worth noting that this module is very useful for judging the impact of any multiband stereo image processing you might apply using Cubase 11’s new (for Pro and Artist) Imager plug‑in... but that’s a topic for another day.

Thursday, November 20, 2025

Wednesday, November 19, 2025

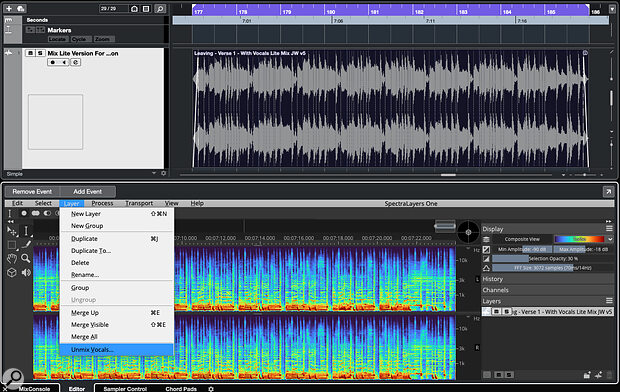

Cubase 11: Vocal Rebalancing With SpectraLayers One

By John Walden

SpectraLayers One is a powerful vocal unmixing tool for Cubase 11 Pro and Artist users.

SpectraLayers One allows you to tweak vocals that are ‘baked into’ a stereo mix!

Have you ever wished, when you don’t have access to the original multitracks, that you could have the ability to ‘unmix’ the different elements within a stereo file to gain access to individual parts — particularly the lead vocals?

Until recently this was rather like attempting to unbake a cake to access the eggs and flour, but the latest generation of spectral‑editing software makes it more viable, and one such app, SpectraLayers One (hereafter SL One), is bundled with Cubase 11 Pro and Artist. SL One may be a cut‑down version of the separately available SpectraLayers Pro 7, but it does boast one of its bigger sibling’s eye‑catching features: stem unmixing. It can only create two stems (vocals and ‘everything else’), but that’s just what you need if you want to do some post‑mix vocal tweaking! In this workshop, I’ll explain what you can do with it, and you’ll find some accompanying audio examples on the SOS website (https://sosm.ag/cubase-0821). Alternatively, download the ZIP file of audio examples here:

You’re The One

SL One can run as a standalone app or as an Audio Extension on an audio clip within Cubase. The first screenshot shows the latter, which allows you to combine SL One processing with further processing and effects in Cubase. Once the Extension is applied, SL One and its various tools appear in Cubase’s Lower Zone.

The unmixing process requires almost no user intervention and is very fast!

For our purposes, we require the Layer/Unmix Vocals option and, despite the under‑the‑hood complexity, it couldn’t be simpler to use: the only user‑defined control is the Sensitivity slider. The default ‘zero’ setting has generally provided the best results for me, but I’ve included an audio example (link above) to demonstrate the differences. Essentially, positive Sensitivity values put more audio into the vocal stem but may include more fragments of other mix elements too, while negative Sensitivity settings are less likely to place other instruments in the vocal stem but can leave more vocal trace elements in the ‘everything else’ stem. Depending on what you’re trying to achieve, either could be useful.

The processing can take a minute or so, depending on the length of the audio clip. Once it is complete, the two stems appear in SL One’s right‑hand panel, with mute and solo buttons that allow easy auditioning of the results. This unmixing process is non‑destructive: if you play back both stems together without further processing, they’ll sum to deliver the original mix. Auditioned in isolation, the vocal stem will inevitably show some artefacts (unless you started with a particularly sparse mix, with few other sounds overlapping the vocal). These artefacts may be non‑vocal elements (eg. traces of drums) or ‘missing’ vocal information that remains in the ‘everything else’ stem. You can hear elements of both types of artefact in the accompanying audio examples.
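One plausible way to guarantee that sum‑to‑the‑original behaviour, offered here as an assumption rather than a description of SL One’s internals, is to define the ‘everything else’ stem as the residual left when a vocal estimate is subtracted from the mix. In the minimal Python sketch below, `split_by_residual` and `null_sum_error` are hypothetical helpers and the stems are plain lists of float samples. However good or bad the vocal estimate is, the two stems sum back to the mix (up to floating‑point rounding); estimation errors simply show up as artefacts in one stem or the other.

```python
def split_by_residual(mix, vocal_estimate):
    """Return (vocal_stem, other_stem) whose sample-by-sample sum equals mix.

    vocal_estimate stands in for whatever a separation model predicts; the
    'everything else' stem is defined as whatever remains after subtracting it.
    """
    if len(mix) != len(vocal_estimate):
        raise ValueError("mix and vocal estimate must be the same length")
    vocal_stem = list(vocal_estimate)
    other_stem = [m - v for m, v in zip(mix, vocal_estimate)]
    return vocal_stem, other_stem


def null_sum_error(mix, vocal_stem, other_stem):
    """Largest absolute difference between the summed stems and the original mix."""
    return max(abs(m - (v + o)) for m, v, o in zip(mix, vocal_stem, other_stem))


if __name__ == "__main__":
    mix = [0.50, -0.25, 0.80, 0.10]
    # A deliberately imperfect estimate: some vocal is missed, some 'drums' leak in.
    estimate = [0.30, -0.05, 0.60, 0.20]
    vocal, other = split_by_residual(mix, estimate)
    print("other stem:", other)
    print("null-sum error:", null_sum_error(mix, vocal, other))
```

In this model, nudging the estimate up or down only moves material between the two stems, which loosely mirrors the trade‑off the Sensitivity slider describes; the total never changes.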

Whether you consider the vocal separation successful will depend on just what you hope to achieve. But given that vocal extraction/isolation is often a last resort, because the original mix project is unavailable, ‘usable’ can almost always be considered a positive outcome — so, are these sorts of results usable?

Balancing Act

Using these two unmixed stems to modify the level of the vocal relative to the instrumental backing is likely to be the easiest trick to pull off. For example, if we wish to raise the vocal level by a dB or three, we can simply increase the gain of the vocal stem. While that will also raise the level of any artefacts in the vocal stem, they are often difficult to detect in the context of the ‘reassembled’ mix.
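The rebalancing arithmetic is simple enough to sketch. Assuming the stems are equal‑length lists of float samples, a level change in decibels becomes a linear amplitude factor of 10^(dB/20), applied only to the vocal stem before the two are summed again. The helper names `db_to_gain` and `rebalance` are hypothetical, chosen for this illustration.

```python
import math


def db_to_gain(db):
    """Convert a level change in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)


def rebalance(vocal_stem, other_stem, vocal_db=0.0):
    """Re-sum two stems with the vocal stem raised or lowered by vocal_db.

    Any artefacts inside the vocal stem are scaled by exactly the same factor.
    Passing vocal_db=-math.inf mutes the vocal stem, leaving only the backing.
    """
    g = db_to_gain(vocal_db)
    return [g * v + o for v, o in zip(vocal_stem, other_stem)]


if __name__ == "__main__":
    vocal = [0.2, -0.1, 0.3]
    backing = [0.5, 0.4, -0.2]
    for db in (-3.0, 3.0, 6.0):
        print(f"{db:+.0f} dB (x{db_to_gain(db):.3f}):",
              [round(x, 3) for x in rebalance(vocal, backing, db)])
    print("vocal muted:", rebalance(vocal, backing, -math.inf))
```

A vocal_db of 0 returns the original mix, +6 dB roughly doubles the vocal stem’s amplitude, and muting the vocal entirely corresponds to the backing‑track use discussed later.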

Once separated, the stems created can be dragged and dropped to the Cubase project window for further processing or editing.

When used in standalone mode, SL One includes gain sliders for the two stems, but these are not available when using the ARA2 plug‑in in Cubase. Instead, you can drag and drop each stem to a new Audio Track in your Cubase project. Not only does this allow you to rebalance them, but you can then apply further editing, audio processing or automation to the individual stems to improve the blend of your ‘rebalance’.

In my second audio example, gain changes ranging from ‑3 to +6 dB are applied to the vocal stem. Your mileage may vary, but to my ears all these rebalanced versions seem pretty effective and transparent. Rebalancing isn’t all that can be done though; as a final stage in this audio example, I’ve added a fresh dollop of both reverb and delay, just to show that more creative changes are also possible (so feel free to experiment!).

A second potential application of SL One’s unmixing facility is to silence the vocalist, to create a backing track. Such a vocal‑free backing track might be used, for example, for personal practice for a vocalist, as a karaoke track, or to generate an underscore music cue for film/TV use where the vocals might impinge upon dialogue. I’ll leave you to navigate any copyright implications, but as the third audio example demonstrates, SL One can take a good stab at this with minimum effort on the part of the user.

As the isolated vocal stem has revealed, the separation process is not perfect but, as a means of rescuing an instrumental version from a full mix, the default results may well be acceptable in some contexts, and a little additional editing can easily improve things further.

Ready (Re)Mix?

The Holy Grail of this unmixing process would be a perfectly isolated vocal track but unless your starting point is a very sparse mix, that’s an incredibly ‘big ask’. Yet, if your isolated vocal stem is intended for use in a remix or mashup project, in which it will be layered with a suitably busy backing track, then might SL One be up to the task?

The fourth example explores just how realistic this proposition might be...

SpectraLayers Pro can generate up to five stems from a stereo mix and, when used in standalone mode, both One and Pro offer gain controls for easy rebalancing of your stems.

I’ve taken the default unmixed vocal stem from our earlier short ‘verse’ audio mix (artefacts included) and built a new backing track for this extracted vocal. In the audio example, I’ve gradually added layers of instrumentation with each pass through the performance. Layered with a single instrument such as a strummed guitar or solo piano, the artefacts are audible — this might still be useful as a scratch vocal to build a ‘vocal + guitar’ or ‘vocal + piano’ remix project, but it’s unlikely to cut the mustard in a release context without considerably more detailed editing work or a new vocal recording. Note, though, that many of the artefacts in the vocal stem appear to be drum related and once new drums and bass are added to a remix, the majority of them are masked.

At this point, it’s not such a stretch to think that even some modest spectral editing on the vocal stem might generate a very usable vocal part, and as more layers are added to the instrumental bed (the final few passes in the audio example include multiple guitars and a synth) the artefacts become even less obvious. So if your remix has a prominent drum beat and doesn’t leave the extracted vocal too exposed, SL One’s vocal unmixing may well prove very usable.

Density Matters

As noted earlier, the success of this vocal unmixing process is very much dependent on the original mix. The one I’ve used for the audio examples is what I’d call moderately dense, and SL One has done a commendable job. But what if your source mix is busier?

As a final audio example, I’ve used an alternative version of my short ‘verse’ clip containing additional instruments (an extra guitar, a keyboard part and some harmony vocals). It’s no surprise that the resulting vocal stem has more artefacts. But, even if it would be more difficult to use the isolated vocal stem in a remix context, the vocal rebalancing and backing track creation discussed above remain distinctly possible.

SL One can’t deal with the obvious legal obligations involved in publishing works that use stems extracted from commercial recordings, but it does make the technical side of the ‘unmixing’ process remarkably easy and efficient. And, of course, it’s a freebie for Cubase 11 Pro and Artist users. Whether it’s vocal rebalancing, backing track creation or, with a bit of luck, vocal isolation, Cubase 11’s SL One can turn something that used to be difficult into something remarkably easy.