By John Walden

VST Expression, introduced in Cubase 5, enables you to extract the best from multi‑articulation sample libraries.

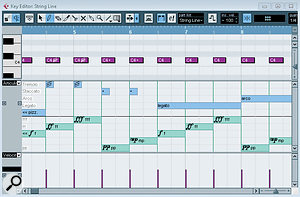

Expression can be added via the Articulation Lane in the Key Editor.


As anyone who plays a 'real' instrument (one that doesn't generate its sound by entirely electronic means) knows, recreating a convincing performance of that instrument using samples can be difficult. The task is made somewhat easier if your sampled instrument includes a range of performance articulations, and switching between these is most commonly controlled via 'keyswitches': notes, usually outside the instrument's playing range, that select which sample layer (and therefore which performance option) the following notes will trigger. This can work well, but it takes a lot of practice to become really fluent with it.

Steinberg designed their VST Expression system to make things simpler. Similar in concept to drum maps, this new function was first unleashed in Cubase 5 and is targeted at multiple articulations for a single instrument. The key strengths of this approach are twofold: it allows you to combine different approaches to generating articulations; and it gives you the ability to 'add' expression after the basic performance has been recorded. Steinberg included a small number of new instruments in HalionOne that support VST Expression but, as with drum maps, it's also possible to create bespoke VST Expression presets for third-party instruments.

Performance Enhancement

The screenshot above shows a MIDI part being edited in the Key Editor (the Cubase 5 Project containing this part can be downloaded from /sos/jan10/articles/cubasetechmedia.htm), played using the new HalionOne VST Expression-ready Tenor Sax instrument. (The new instruments have a 'VX' in the preset name, so it's easy to spot them.) If an instrument has an Expression Map assigned to it, the articulations can be added and edited in the Articulation Lane. For example, in this case, I've used the 'growl' and 'fall' articulations for particular notes in the phrase.

Adding articulations 'after the fact' as part of the editing process is often easier than trying to play keyswitches in 'live' via your keyboard. While the Key Editor's Articulation Lane provides the most obvious route to do this, another option is the Score Editor. Indeed, for those using Cubase to generate a score for other musicians, the fact that Expression Map articulations will appear in the printed score could prove very handy.

Map Reading

VST Expression articulations appear in the Score editor: a great facility for composers.


In order to use any of these articulation editing tools, the instrument needs an Expression Map. The basics of Expression Map creation are explained in the Cubase 5 Operation Manual, so I won't cover all that ground here. However, the manual deals with some significant details rather tersely (it's very much a manual rather than a tutorial). Perhaps the most useful of these is how articulations can adjust MIDI data in real time, so I'll focus on that.

The HalionOne Tenor Sax Expression Map contains five articulations, each based on a keyswitch that accesses a different performance layer (such as 'growl' or 'fall'), but Expression Maps aren't just about keyswitch options. They can be used to generate other forms of 'expression' by applying changes to the recorded MIDI data — and this allows you to get much more out of your sampled instrument, whether it has keyswitch articulations or not.

For example, let's imagine you wanted a phrase to be both staccato (short notes) and fortissimo (relatively loud). If you were using just keyswitched articulation changes, you might need an instrument with three sample layers: one for just staccato, a second for fortissimo, and a third for staccato and fortissimo. However, only the most detailed sample libraries provide this degree of sample-layer coverage for every possible combination of performance characteristics, and even then, keeping all those layers loaded would eat up a lot of RAM.
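To put numbers on this: if each performance characteristic is treated as a simple on/off choice, a keyswitch-only approach needs a dedicated layer for every non-default combination, which works out at 2^n - 1 layers for n characteristics. The Python sketch below simply enumerates those combinations. The characteristic names are illustrative (the 'accent' entry is hypothetical), and nothing here reflects how any particular sample library is actually built.

```python
from itertools import combinations

def layer_combinations(characteristics):
    """List every non-default combination of on/off performance characteristics,
    i.e. each sample layer a keyswitch-only approach would need."""
    return [
        " + ".join(combo)
        for k in range(1, len(characteristics) + 1)
        for combo in combinations(characteristics, k)
    ]

two = layer_combinations(["staccato", "fortissimo"])
print(two)       # ['staccato', 'fortissimo', 'staccato + fortissimo']
print(len(two))  # 3

# One more (hypothetical) characteristic more than doubles the count: 2**3 - 1
print(len(layer_combinations(["staccato", "fortissimo", "accent"])))  # 7
```

Each extra characteristic roughly doubles the number of layers required, which is why full coverage quickly becomes impractical.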

You could, of course, emulate some of these performance variations via MIDI in the way you play: shorter notes for staccato and higher velocities for fortissimo. As well as building this into your performance, you can add it after the fact via an Expression Map.

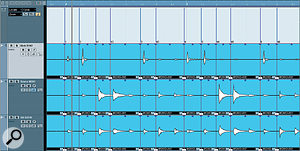

The screenshot above shows an example where five different performance types have been built from three articulations. In each case, the Output Mapping section has been used to change the MIDI data in real time on playback. For fortissimo (ff), I've increased the MIDI velocity to 150 percent of its actual value, while for staccato, I've shortened the length of the MIDI note to 20 percent of its actual value. And where both fortissimo and staccato are required, I've applied both of these changes.
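The Output Mapping arithmetic can be modelled in a few lines of Python. This is a sketch of the logic only, not how Cubase implements it: clamping velocity to the MIDI range of 1 to 127 (a velocity of 0 means note-off in MIDI) and the one-tick minimum length are assumptions made here so the numbers stay valid.

```python
from dataclasses import dataclass, replace

MIDI_VELOCITY_MAX = 127

@dataclass(frozen=True)
class Note:
    pitch: int
    velocity: int
    length: int  # length in ticks

@dataclass(frozen=True)
class OutputMapping:
    velocity_pct: float = 100.0  # new velocity as a percentage of the recorded value
    length_pct: float = 100.0    # new length as a percentage of the recorded value

    def apply(self, note: Note) -> Note:
        # Scale velocity, then keep it inside the valid note-on range 1..127 (assumption)
        velocity = round(note.velocity * self.velocity_pct / 100)
        velocity = max(1, min(MIDI_VELOCITY_MAX, velocity))
        # Scale length, never shorter than one tick (assumption)
        length = max(1, round(note.length * self.length_pct / 100))
        return replace(note, velocity=velocity, length=length)

# The three mappings described in the text
FORTISSIMO = OutputMapping(velocity_pct=150)
STACCATO = OutputMapping(length_pct=20)
FF_STACCATO = OutputMapping(velocity_pct=150, length_pct=20)

played = Note(pitch=60, velocity=64, length=480)
print(FORTISSIMO.apply(played))   # Note(pitch=60, velocity=96, length=480)
print(STACCATO.apply(played))     # Note(pitch=60, velocity=64, length=96)
print(FF_STACCATO.apply(played))  # Note(pitch=60, velocity=96, length=96)
```

In this example, applying FORTISSIMO and then STACCATO gives the same note as FF_STACCATO. Note also that a note recorded at velocity 100 would be pushed past 127 by the 150 percent boost and clipped, which is exactly the headroom problem the next section addresses.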

Play, Then Shape

When using Expression Maps, the simplest method to build a performance uses four steps. Using the simple Expression Map described above (and which is also available from the link given earlier), the approach might be as follows.

1. Having created your MIDI track and linked it to the appropriate synth patch, open the VST Expression slot in the track's Inspector panel to select the Expression Map — or load it from a disk folder via the VST Expression Setup window if it doesn't already appear in the Inspector list.

2. Record the basic performance via your MIDI keyboard. Focus just on getting the right notes for now, rather than building expression into the performance.

3. Now for a couple of clean‑up tasks. First, apply any MIDI quantising you wish to use, to make expression elements within the Articulation Lane a little neater. Then, more importantly, use the MIDI/Functions/Velocity menu option to scale your overall MIDI velocity to somewhere in the middle of the velocity range. As any performance articulations you subsequently apply can include both increases and decreases in the MIDI velocity (to generate softer and louder passages), you need to make sure you have some room for manoeuvre: a fortissimo articulation won't work if you're already at the maximum MIDI velocity value before you apply it!

4. The final stage involves adding in your 'expression' using one of the MIDI editors — and the Articulation Lane in the Key Editor is the most obvious choice. If your Expression Map is set up correctly, this is easy to do and should help bring the performance to life.
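The point about headroom in step 3 is easy to check numerically. The sketch below linearly rescales recorded velocities into a middle band and then works out the largest velocity boost that band can take before the loudest note hits 127. The 48 to 80 band is a hypothetical choice, and the linear rescale is an illustration of the idea rather than a description of what Cubase's Velocity function does internally.

```python
MIDI_VELOCITY_MAX = 127

def scale_to_band(velocities, low=48, high=80):
    """Linearly map recorded velocities into [low, high], preserving their order."""
    vmin, vmax = min(velocities), max(velocities)
    if vmin == vmax:  # a flat performance: put every note in the middle of the band
        return [round((low + high) / 2)] * len(velocities)
    return [round(low + (v - vmin) * (high - low) / (vmax - vmin)) for v in velocities]

def max_boost_pct(velocities):
    """Largest velocity percentage that leaves the loudest note at or below 127."""
    return 100 * MIDI_VELOCITY_MAX / max(velocities)

recorded = [60, 90, 127, 110]
print(max_boost_pct(recorded))  # 100.0: no room at all for a 150% fortissimo
scaled = scale_to_band(recorded)
print(scaled)                   # [48, 62, 80, 72]
print(max_boost_pct(scaled))    # 158.75: a 150% boost now fits
```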

The Right Direction

This Expression Map uses real‑time adjustment of MIDI data to create five articulations from three keyswitched sample layers.


As shown in the Articulations section in the VST Expression window, an articulation can be set as either a Direction or an Attribute. Attributes apply to single notes, whereas Directions apply to all notes until the next Direction is received.

In our example, fortissimo is specified as a Direction and, once placed in the Articulation Lane, it will apply to all notes until a different Direction is applied. In contrast, I configured the staccato articulation as an Attribute, so it only applies to specific individual notes: once that note is played, the next note is played at its original length. Clearly, only one Direction can apply at any one time, but multiple Attributes can be combined with a Direction (just as I've added the staccato Attribute to the fortissimo Direction), to build different performance options. I'm sure there are some musical rules that, strictly speaking, dictate which performance options are conventionally regarded as Directions and which are regarded as Attributes, but when constructing your own VST Expression Maps, you might need to be a little more flexible in how you assign them in the Articulations panel, if you're to create the performance combinations you require.
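The difference between the two can be expressed as a small resolution loop: a Direction updates a 'current' state that persists, while Attributes are read from each note and then forgotten. This Python sketch models that behaviour as described above. The 'normal' starting state and the 'p' Direction in the example are hypothetical, and Groups are ignored.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Optional, Tuple

@dataclass
class Note:
    pitch: int
    direction: Optional[str] = None  # a Direction placed at this note, if any
    attributes: FrozenSet[str] = field(default_factory=frozenset)  # this note only

def resolve(notes: List[Note], start: str = "normal") -> List[Tuple[int, str, FrozenSet[str]]]:
    """Return (pitch, active Direction, Attributes) for each note in order."""
    current = start
    resolved = []
    for note in notes:
        if note.direction is not None:
            current = note.direction  # a Direction stays in force until the next one
        resolved.append((note.pitch, current, note.attributes))
    return resolved

phrase = [
    Note(60, direction="ff"),
    Note(62, attributes=frozenset({"staccato"})),  # ff + staccato on this note only
    Note(64),                                      # still ff, back to full length
    Note(65, direction="p"),
]
for pitch, direction, attributes in resolve(phrase):
    print(pitch, direction, sorted(attributes))
```

Running it prints `60 ff []`, `62 ff ['staccato']`, `64 ff []` and `65 p []`: the staccato Attribute affects only note 62, while the ff Direction carries on until the next Direction replaces it.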

Just An Expression?

Space precludes me from delving more deeply into some of the other Expression Map options (for example, the use of Groups) in this month's column, but it's a subject I'll come back to. Meanwhile, combining the MIDI options described above with a sampled instrument that already includes keyswitched performance options provides tremendous flexibility. For example, if your sampled instrument includes a staccato layer accessed via a keyswitch, you could use the MIDI‑based technique described above to generate different levels of dynamics (soft, normal, loud, very loud...). The same principle could be applied to all of the keyswitch layers, so the potential for creating more believable performances is considerable.
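As a rough model of that idea, the sketch below builds one sound slot for every pairing of a keyswitch layer with a MIDI velocity level, then renders a note through a slot by sending the keyswitch first. The keyswitch note numbers, velocity percentages and keyswitch velocity are all hypothetical placeholders; substitute the values your own library uses.

```python
from itertools import product

# Hypothetical keyswitch notes for two sample layers
KEYSWITCHES = {"sustain": 24, "staccato": 25}
# Hypothetical velocity scaling (percent) for each dynamic level
DYNAMICS = {"soft": 60, "normal": 100, "loud": 130, "very loud": 150}
KEYSWITCH_VELOCITY = 100  # placeholder value for the keyswitch note itself

def build_slots(keyswitches, dynamics):
    """One slot per (layer, dynamic) pair: layers x levels slots in total."""
    return [
        {"name": f"{layer} {level}", "keyswitch": key, "velocity_pct": pct}
        for (layer, key), (level, pct) in product(keyswitches.items(), dynamics.items())
    ]

def render(slot, pitch, velocity):
    """Return the (note, velocity) events to send: keyswitch first, then the scaled note."""
    scaled = max(1, min(127, round(velocity * slot["velocity_pct"] / 100)))
    return [(slot["keyswitch"], KEYSWITCH_VELOCITY), (pitch, scaled)]

slots = build_slots(KEYSWITCHES, DYNAMICS)
print(len(slots))                # 8: two keyswitched layers, four dynamics each
print(slots[7]["name"])          # staccato very loud
print(render(slots[7], 60, 64))  # [(25, 100), (60, 96)]
```

The slot count is the product of layers and levels, so each keyswitched layer gains the full set of dynamics without any extra samples having to be loaded.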

Steinberg's web site hosts a selection of Expression Maps for some third-party sample libraries (www.steinberg.net/index.php?id=1944&L=1), and this list should grow over time. In the meantime, building your own Expression Maps for particular instruments isn't that difficult, provided you're prepared for some initial experimentation until you get your head around the way the various elements slot together. As a starting point, I've placed a second, rather more detailed, MIDI‑based Expression Map on the SOS web site (see the web address given earlier), so go on: express yourself!

Published January 2010